Artists have teamed up with university researchers to thwart copycat AI that analyzes their work and imitates their styles. After learning that several artificial intelligence (AI) models were being “trained” on her art without credit or compensation, American illustrator Paloma McClain went into defense mode.

“It bothered me,” McClain told AFP. “I believe truly meaningful technological advancement is done ethically and elevates all people instead of functioning at the expense of others.”

McClain turned to Glaze, a free program developed by researchers at the University of Chicago. By altering pixels in ways humans cannot perceive, Glaze effectively outwits AI models during training, making a digital artwork appear to the algorithms very different from what it actually is.

“We’re providing technical tools to help protect human creators against invasive and abusive AI models,” said Ben Zhao, a professor of computer science and member of the Glaze team. Glaze was built in a four-month sprint and grew out of technology used to disrupt facial recognition systems.

“We were working at super-fast speed because we knew the problem was serious,” Zhao said of the rush to defend artists against software imitators. “A lot of people were in pain.”

Generative AI giants have agreements to use data for training in some cases, but the vast bulk of the digital images, audio, and text used to shape the way supersmart software thinks has been scraped from the web without explicit consent.

Glaze has been downloaded more than 1.5 million times since its release in March 2023, according to Zhao. His team is working on a Glaze enhancement called Nightshade that strengthens defenses by confusing AI, for example by getting it to interpret a dog as a cat.

“I believe Nightshade will have a noticeable effect if enough artists use it and put enough poisoned images into the wild,” McClain said, meaning readily available online. “According to Nightshade’s research, it wouldn’t take as many poisoned images as one might think.”

Zhao’s team has been approached by companies interested in using Nightshade, according to the Chicago academic. “The goal is for people to be able to protect their content, whether it’s individual artists or companies with a lot of intellectual property,” Zhao added.

Startup Spawning has developed Kudurru, software that detects attempts to harvest large numbers of images from a website. An artist can then block access or send back images that do not match what was requested, tainting the pool of data used to train AI, according to Spawning co-founder Jordan Meyer. The Kudurru network currently includes more than a thousand websites.

Spawning has also launched haveibeentrained.com, a website with an online tool that lets artists find out whether their digitized works have been fed into an AI model and opt out of such use in the future.

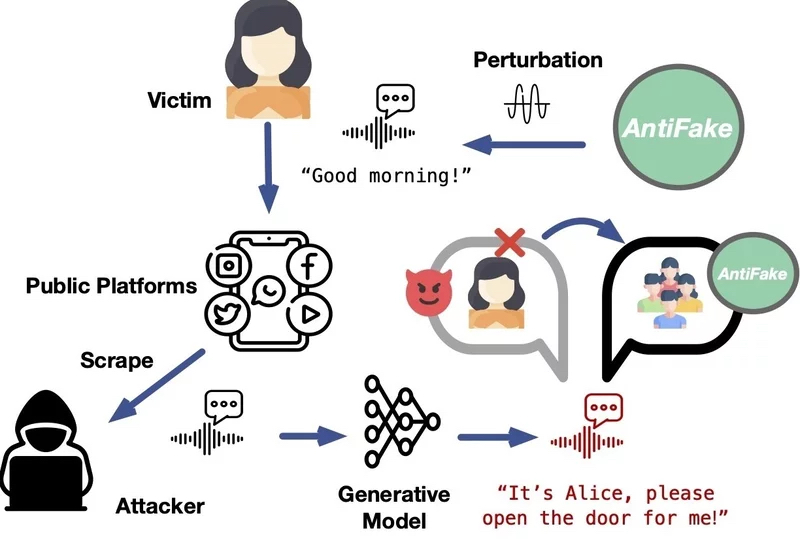

As defenses ramp up for images, researchers at Washington University in Missouri have developed AntiFake software to thwart AI that copies voices. AntiFake enriches digital recordings of people speaking, adding noise that is inaudible to humans but makes it “impossible to synthesize a human voice,” said Zhiyuan Yu, the PhD candidate behind the project.

The program aims to go beyond stopping the unauthorized training of AI to preventing the creation of “deepfakes,” bogus videos or soundtracks that show famous people, politicians, or relatives saying or doing something they did not.

A prominent podcast recently contacted the AntiFake team for help in stopping its productions from being hijacked, according to Zhiyuan Yu. The open-source software has so far been used mainly for recordings of people speaking, but it could also be applied to songs, the researcher said.

“The best solution would be a world in which all data used for AI is subject to consent and payment,” said Meyer. “We hope to push developers in this direction.”