YouTube today introduced a feature that lets creators self-identify when their videos contain content made with artificial intelligence (AI). The site had previously announced that, starting in 2024, creators would have to disclose AI-generated content. However, as on other creative platforms, the labels operate on an honor system.

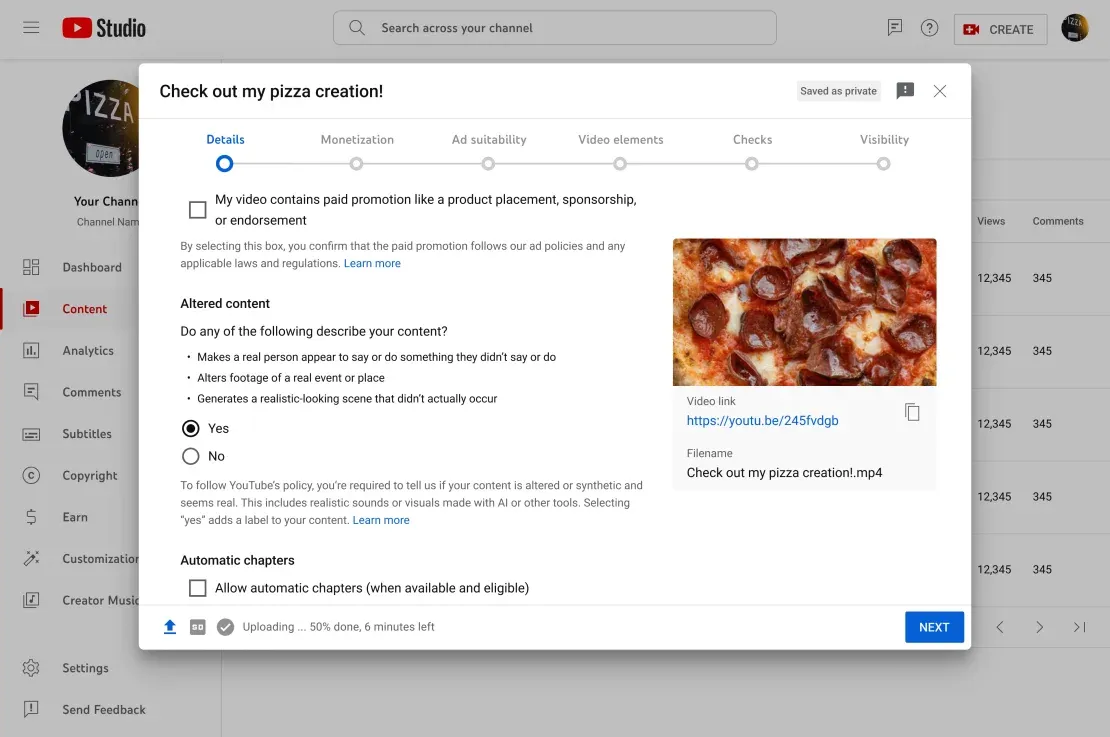

During the upload and publishing process, a checkbox will appear that requires creators to disclose any altered or synthetic content that looks realistic. This includes editing footage of real events and places, showing a real person doing or saying something they did not do or say, or depicting a realistic-looking scene that never actually happened. YouTube's examples include using a deepfake of a real person's voice to narrate a video and showing a fake storm heading toward a real town.

However, disclosures won't be required for beauty filters, special effects such as background blur, or clearly unrealistic content such as animation.

YouTube announced its policy on AI-generated content in November, effectively splitting the rules into two tiers: strict protections for artists and record labels, and looser standards for everyone else. An artist's label can have deepfake music removed, such as a track of Drake singing an Ice Spice song or rapping lyrics written by someone else.

YouTube said at the time that creators would have to disclose AI-generated content under these rules, but it did not specify exactly how that would work. It may also be harder for an ordinary YouTube user to get a deepfake of themselves removed: they must fill out a privacy request form, which the company will then review. In today's post, YouTube said it is still working on an improved privacy process but offered few details about it.

Like other platforms that have introduced AI content labels, YouTube's feature relies on the honor system: creators are expected to be truthful about what appears in their videos. YouTube spokesperson Jack Malon recently told The Verge that the company is investing in tools to detect AI-generated content, although AI detection software has a long track record of being highly inaccurate.

In a blog post today, YouTube notes that it may add an AI disclosure to a video even when the uploader has not, particularly when the altered or synthetic content could confuse or mislead viewers. In addition, more prominent labels will appear on the video itself for sensitive topics such as economics, politics, and health.