The tech giant's latest AI model is only marginally better than OpenAI's eight-month-old model. Alphabet Inc.'s Google is in crisis mode during what is traditionally a quiet stretch between Thanksgiving and Christmas.

After being caught flat-footed by ChatGPT a year ago, the sluggish search behemoth has been anxious to portray itself as moving quickly. On Wednesday, following rumors of a delay, Google unveiled its new artificial intelligence model, Gemini. It can detect sleight-of-hand magic tricks and performs exceptionally well on accounting exams. Developers and members of the public are now testing it and sharing their verdicts on social media. Reviews are mixed: some find it groundbreaking, but most are not so impressed.


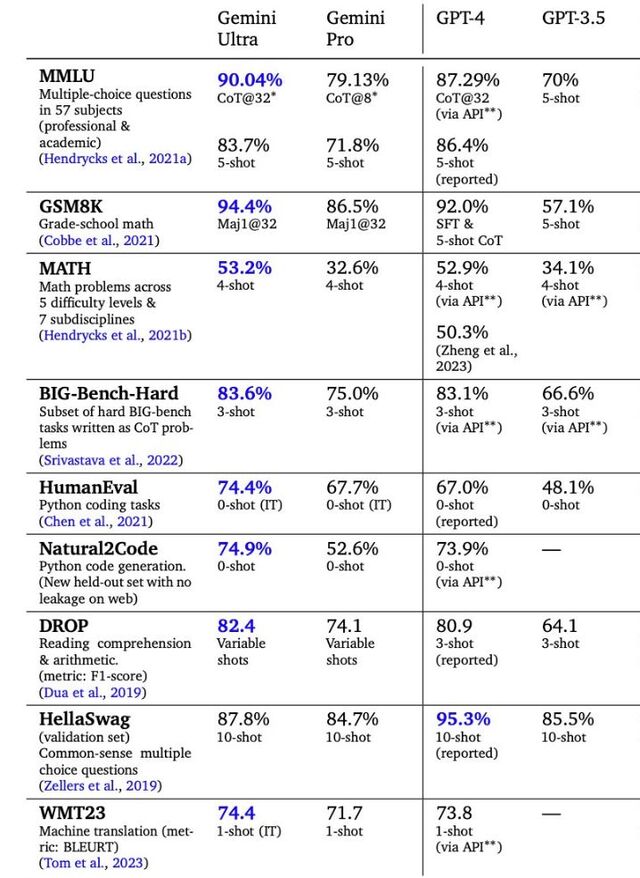

Despite the impressive spin, the sample video Google provided has yet to win over social media, and Google continues to trail OpenAI in technical capability. Now for the technical details. Google published a table comparing Gemini's performance with that of OpenAI's top model, GPT-4.

According to Google's table, Gemini Ultra (blue) outperforms GPT-4 on most standard benchmarks. These benchmarks, which test models on topics such as professional law, moral dilemmas, and high school physics, are the yardsticks by which the AI race is measured.

However, on most of those benchmarks, Gemini Ultra beat GPT-4 by only a narrow margin. OpenAI finished work on GPT-4 a year ago, and Google's top AI model has made only marginal gains over it. And Ultra has yet to be released.

Even if it comes out in early January, as Google has hinted, Gemini Ultra may be the top model only for a short while. Google has taken more than a year to catch up, while OpenAI has had nearly a year to work on its next artificial intelligence model, GPT-5.

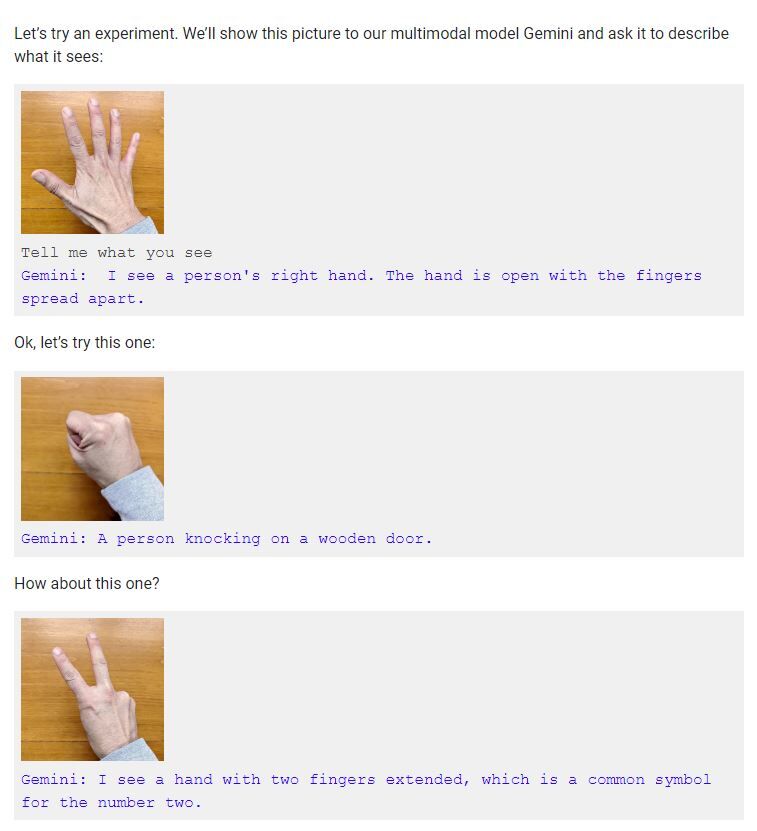

Then there's the video demo that technologists on X, the site formerly known as Twitter, called "jaw-dropping."

At first glance, this is remarkable material. The model's ability to track a ball of paper under a plastic cup, or to infer that a dot-to-dot picture depicts a crab before it has even been drawn, reflects the kind of reasoning skills that Google's DeepMind AI lab has been working on for some time. Other AI models don't have that. But many of the other capabilities on display are common: Wharton professor Ethan Mollick has shown that ChatGPT Plus can replicate them.

Google has also acknowledged that the video was edited. As its YouTube description states, latency was reduced and Gemini's outputs were shortened for brevity in the demo. In other words, each response took longer than the video shows.

The demo also wasn't carried out by voice or in real time. In response to Bloomberg Opinion's question about the video, a Google representative said it was made by "using still image frames from the footage and prompting via text." The representative also pointed Bloomberg Opinion to a website showing how users might interact with Gemini using photos of their hands, drawings, or other objects. In other words, the voice in the demo was reading out prompts written by humans, which had been given to Gemini alongside still images. That is a far cry from what Google implied: that Gemini could hold a natural-sounding voice conversation while watching and responding to its surroundings in real time.

The video also doesn't mention that this demo is likely using the unreleased Gemini Ultra model. Leaving out such details points to a broader marketing campaign: Google wants us to remember that it has more data and employs one of the world's largest teams of artificial intelligence experts. As it did on Wednesday, it is also trying to highlight the reach of its distribution network by bringing less powerful versions of Gemini to Chrome, Android, and Pixel phones.

But being everywhere is not necessarily an advantage in tech. Early mobile heavyweights BlackBerry Ltd. and Nokia Oyj learned that the hard way in the 2000s, when Apple introduced the iPhone, a more capable and easier-to-use product that promptly ate their lunch. To succeed in the software industry, you need the best-performing systems.

Google is likely timing its showboating to take advantage of the recent chaos at OpenAI. According to a report in The Wall Street Journal, Google wasted no time launching a sales drive to persuade OpenAI's corporate customers to switch to Google after a board coup briefly ousted CEO Sam Altman and cast doubt on the company's survival. Now, with the debut of Gemini, it is riding that wave of uncertainty.

Impressive demos don't always lead to real success; Google has shown off groundbreaking technology in the past that never caught on. (Duplex, anyone?) Until now, Google's enormous bureaucracy and multiple layers of product managers have kept it from shipping products as nimbly as OpenAI. That is not necessarily a bad thing as society grapples with AI's profound impact. But be wary of Google's latest show of forward momentum. It is still trailing behind.