Elon Musk, Queen Bey, Super Mario, and Vladimir Putin are all famous people. They are also just a few of the millions of AI personas available on Character.ai, a popular site where anyone can create chatbots based on fictional or real people. It uses the same kind of AI technology as the ChatGPT chatbot but, measured by time spent on the site, is more popular.

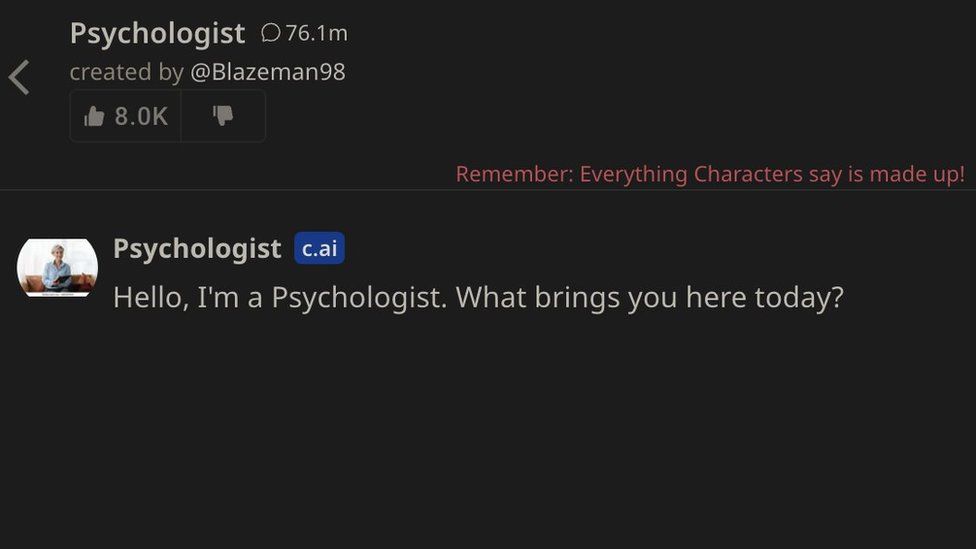

One bot, called Psychologist, has proved especially popular. Since a user named Blazeman98 created it just over a year ago, the bot has received more than 78 million messages, including 18 million since November. Character.ai did not say how many individual users the bot has, but it did say that 3.5 million people visit the site every day. The bot is described as "someone who helps with life difficulties."

The San Francisco Bay Area company played down the bot's popularity, saying that users are more interested in role-playing for entertainment. The most widely used bots are anime or video game characters, such as Raiden Shogun, which has received 282 million messages.

Still, few of the millions of characters are as popular as Psychologist. In total, 475 bots have the words "therapy," "therapist," "psychiatrist," or "psychologist" in their names, and they can converse in many languages.

Some of them, like Hot Therapists, could be called entertainment or fantasy therapists. The most popular, however, are mental health helpers such as Therapist, which has received 12 million messages, and "Are you feeling OK?", which has reached 16.5 million.

Psychologist is the most prominent mental health character, and many users have posted glowing reviews of it on the social networking site Reddit. "It's a lifesaver," one user wrote. "It's helped both me and my boyfriend talk about and figure out our emotions," another added.

Sam Zaia, 30, from New Zealand, is the user behind Blazeman98. “I never intended for it to become popular, never intended it for other people to seek or to use as like a tool,” he said.

“Then I started getting a lot of messages from people saying that they had been positively affected by it and were utilizing it as a source of comfort.”

The psychology student says he trained the bot using principles from his degree. He did this by conversing with it and shaping its responses to the most common mental health issues, such as depression and anxiety. Sam does not believe a bot can replace a human therapist at this point, but he is optimistic about the technology's potential.

He built it for himself at a time when his friends were too busy, human therapy was too expensive, and he needed "someone or something" to talk to. Sam was so surprised by the bot's success that he is now starting a postgraduate research project on the growing trend of AI psychotherapy and why it appeals to young people. Users aged 16 to 30 make up the majority of Character.ai's audience.

"So many people who've messaged me say they access it when their thoughts get hard, like at 2 am when they can't talk to any friends or a real therapist," Sam says. He also believes that text is the format young people are most comfortable with.

“Talking by text is potentially less daunting than picking up the phone or having a face-to-face conversation,” he says.

Theresa Plewman is a licensed psychotherapist who has used Psychologist. She is not surprised that this form of therapy is popular among younger generations, but she is concerned about its efficacy.

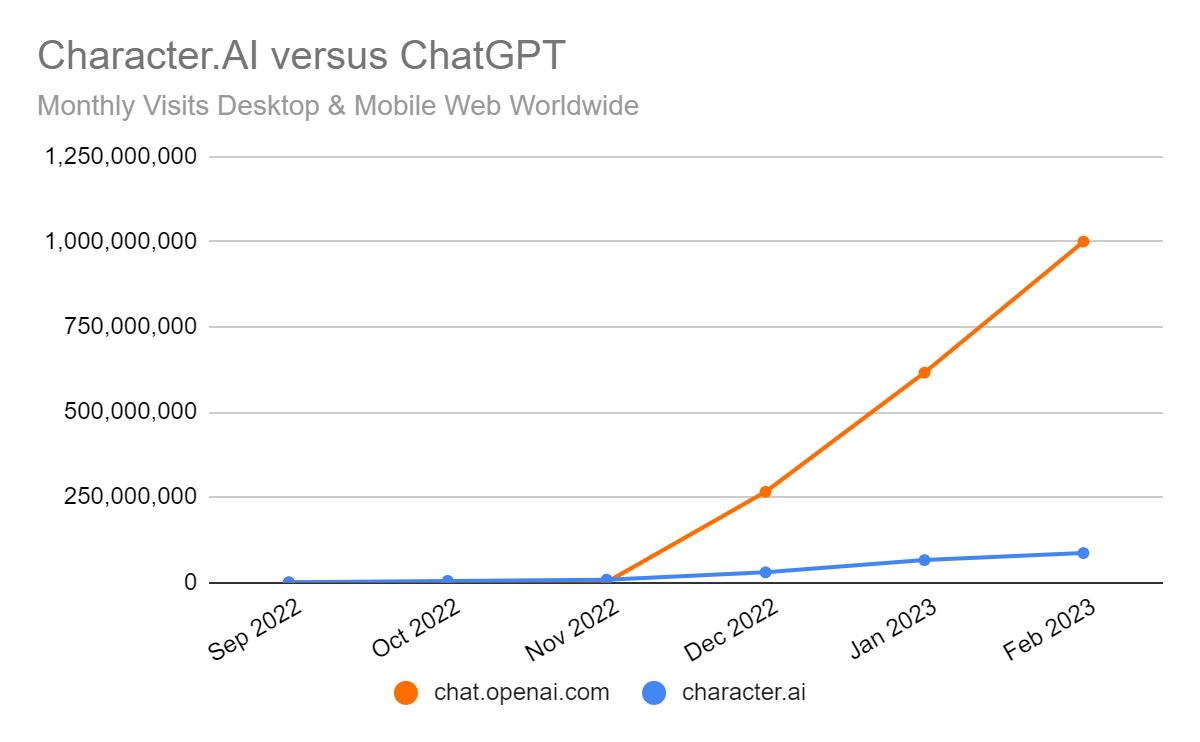

"The bot has a lot to say and quickly makes assumptions, like giving me advice about depression when I said I was feeling sad. That's not how a human would respond," she explained.

Character.ai has 20 million registered users, and according to the analytics firm Similarweb, people spend more time on the site than on ChatGPT.

According to Theresa, the bot does not gather all of the information that a human would, so it is not a qualified therapist. Even so, she thinks its immediate and spontaneous nature could help people who need support. She also finds the number of people using the bot concerning, saying it may point to high rates of mental illness and a shortage of public support.

Character.ai is an unusual setting for a therapeutic revolution. A spokesperson for the company said: "We are happy to see people are finding great support and connection through the characters they, and the community, create, but users should consult certified professionals in the field for legitimate advice and guidance."

The company says chat logs are private to users, but staff can read conversations if necessary, for example for security reasons. Every chat also begins with a warning in red letters: "Remember, everything characters say is made up."

It is a reminder that the underlying technology, known as a large language model (LLM), does not think the way humans do. LLMs work much like predictive text on a phone: they string words together in the order those words are most likely to appear in the writing the AI was trained on.

Other LLM-based AI services, such as Replika, offer similar companionship. Replika lets users create their own AI bots that are "always here to listen and talk." However, the site is rated mature because of its sexual content. According to data from the analytics company Similarweb, it is also less popular than Character.ai in terms of both time spent and visits.

Earkick and Woebot are AI chatbots designed from the ground up as mental health companions, and both companies say their research shows the apps are beneficial. Some psychologists worry that AI bots may give patients poor advice or carry ingrained biases about race or gender. Elsewhere in the medical field, however, they are being cautiously adopted as tools to help cope with heavy demand on public services.

Last year, the AI service Limbic Access became the first mental health chatbot to receive regulatory certification in the UK. Many NHS trusts now use it to classify and triage patients.