Anthropic today introduced the newest version of Claude, its GenAI technology. The company is backed by Google and by hundreds of millions of dollars in venture funding, and it claims that its AI chatbot outperforms OpenAI’s GPT-4.

Anthropic’s new GenAI is called Claude 3. The family comprises three models: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus, with Opus being the most powerful. All three show “increased capabilities” in analysis and forecasting, Anthropic claims, and outperform models such as ChatGPT, GPT-4, and Google’s Gemini 1.0 Ultra (although not Gemini 1.5 Pro) on certain benchmarks.

Notably, Claude 3 is the first GenAI from Anthropic that can analyze images as well as text, which puts it on par with certain versions of Gemini and GPT-4. It can handle photographs, charts, graphs, and technical diagrams drawn from PDFs, slideshows, and other document types.

It can analyze up to 20 images in a single request, a step beyond some GenAI competitors. According to Anthropic, this allows it to compare and contrast images.

However, Claude 3’s image processing has limitations. Anthropic has disabled the models’ ability to identify people, likely because of the potential legal and ethical implications. The company also acknowledges that Claude 3 makes mistakes with “low-quality” images (those under 200 pixels) and struggles with spatial reasoning tasks (like reading a watch face) and object counting (Claude 3 cannot give exact counts of objects in images).

Claude 3 also cannot generate artwork. For now, the models only analyze images.

Whether processing text or images, Anthropic claims that Claude 3 will generally outperform its predecessors at following multi-step instructions, producing structured output in formats like JSON, and conversing in languages other than English. Anthropic says that Claude 3 should have a “more nuanced understanding of requests” and should therefore refuse to answer questions less often. The models will soon cite the sources of their answers so that users can verify them.

“Claude 3 tends to generate more expressive and engaging responses,” reports Anthropic in a supporting article. “[It’s] easier to prompt and steer compared to our legacy models. Users should find that they can achieve the desired results with more concise prompts.”

Some of those improvements stem from Claude 3’s expanded context. A model’s context, or context window, refers to the input data (such as text) that the model considers before generating output. Models with small context windows tend to “forget” the content of even very recent conversations, leading them to veer off topic, often in problematic ways. In addition, large-context models can, in theory, better grasp the narrative flow of the data they take in and generate contextually richer responses.

Anthropic says Claude 3 will initially support a 200,000-token context window, equivalent to roughly 150,000 words. Select customers, however, will get access to a 1,000,000-token context window, or approximately 700,000 words. That is on par with Google’s newest GenAI model, the aforementioned Gemini 1.5 Pro, which also offers a context window of up to one million tokens.
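As a rough illustration of those figures, the sketch below estimates whether a piece of text would fit in a given context window. It assumes the ratio implied above (about 150,000 words per 200,000 tokens, or 0.75 words per token) and counts whitespace-separated words. Real tokenizers behave differently, and the function names here are hypothetical, not part of any Anthropic API.

```python
import math

# Assumption: ~0.75 words per token, derived from the article's figure of
# roughly 150,000 words for a 200,000-token window. Actual tokenizers vary.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its whitespace-separated words."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(text: str, context_window: int = 200_000) -> bool:
    """Return True if the estimated token count fits within the window."""
    return estimate_tokens(text) <= context_window


if __name__ == "__main__":
    sample = " ".join(["word"] * 150_000)
    print(estimate_tokens(sample), fits_in_context(sample))
```

An estimate like this is only useful for quick sizing. Anything close to the limit would need to be checked against the provider's actual token counts.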

Just because Claude 3 improves on its predecessors doesn’t mean it’s perfect.

According to a technical whitepaper published by Anthropic, Claude 3 shares the problems of prior GenAI models, including bias and hallucinations. Unlike some GenAI models, Claude 3 cannot search the web; the models can only answer questions using data from before August 2023. And while Claude 3 is multilingual, it is not as fluent in some “low-resource” languages as it is in English…

However, Anthropic promises frequent updates to Claude 3 in the coming months. The company said in a blog post, “We don’t believe that model intelligence is anywhere near its limits, and we plan to release [enhancements] to the Claude 3 model family over the next few months.”

Opus and Sonnet are available now on the web, as well as through Anthropic’s developer console and API, Amazon’s Bedrock platform, and Google’s Vertex AI. Haiku will follow later this year.

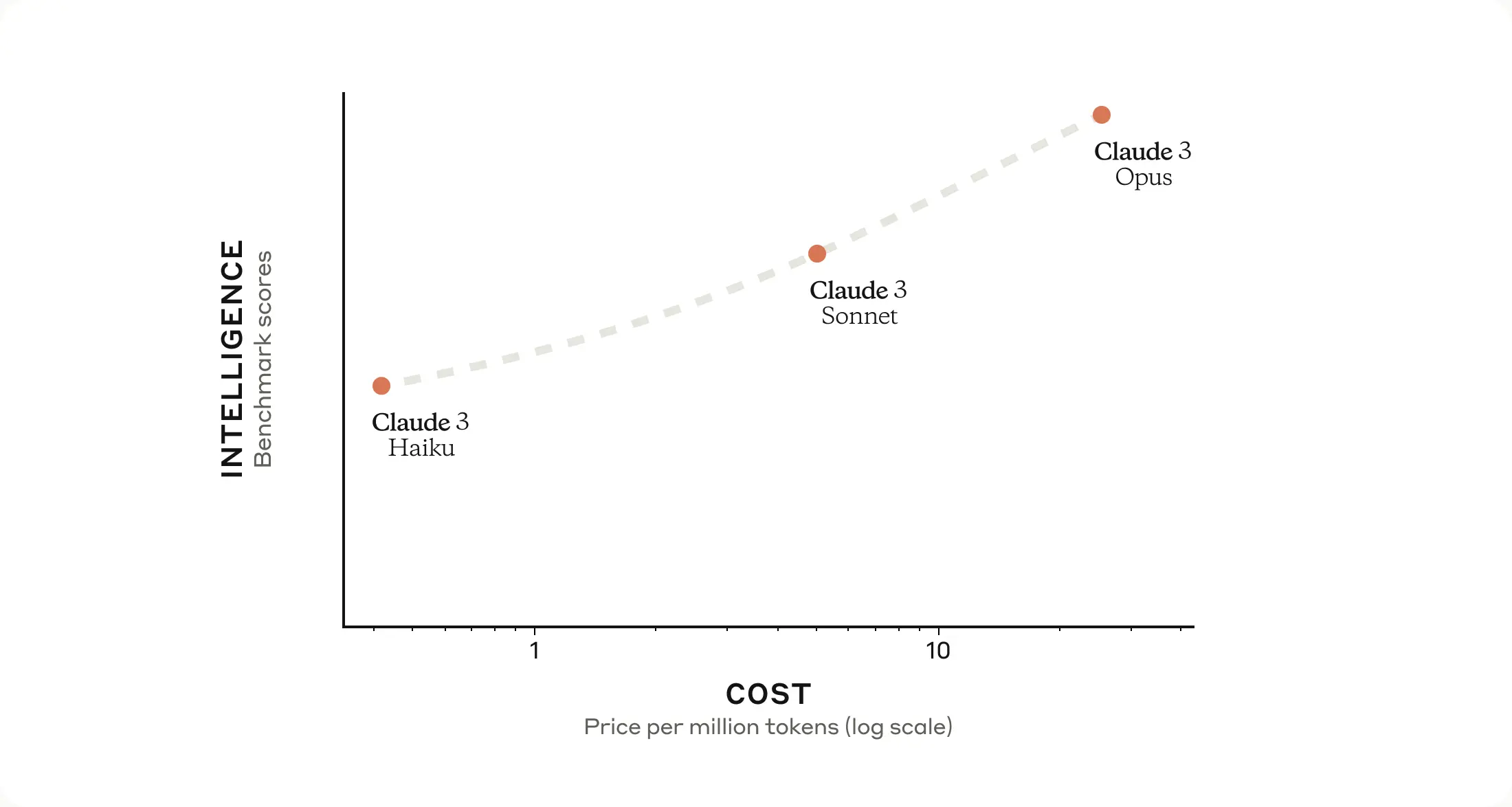

Claude Pricing:

Presented below is the price list:

- Opus: $15 per million input tokens and $75 per million output tokens.

- Sonnet: $3 per million input tokens and $15 per million output tokens.

- Haiku: $0.25 per million input tokens and $1.25 per million output tokens.
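
To make the price list concrete, here is a minimal sketch that estimates the cost of a single request from the per-million-token rates listed above. The dictionary keys and the `estimate_cost` helper are hypothetical names chosen for illustration, and the sketch assumes billing is a simple linear function of input and output tokens.

```python
from decimal import Decimal

# US dollars per million tokens (input, output), taken from the list above.
PRICES_PER_MILLION = {
    "opus": (Decimal("15"), Decimal("75")),
    "sonnet": (Decimal("3"), Decimal("15")),
    "haiku": (Decimal("0.25"), Decimal("1.25")),
}

TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the dollar cost of one request (hypothetical helper)."""
    input_price, output_price = PRICES_PER_MILLION[model]
    total = input_tokens * input_price + output_tokens * output_price
    return total / TOKENS_PER_MILLION


if __name__ == "__main__":
    # Example: a prompt filling a 200,000-token window plus a 1,000-token reply.
    for name in PRICES_PER_MILLION:
        print(name, estimate_cost(name, 200_000, 1_000))
```

Under these assumptions, the same request costs five times as much on Opus as on Sonnet, since both the input and output rates differ by that factor.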

We previously reported that Anthropic’s long-term goal is to develop a next-generation algorithm for “AI self-teaching.” As we have seen with GPT-4 and other large language models, an algorithm of this kind could power virtual assistants that handle a wide range of tasks, including answering emails, conducting research, and even generating art and novels.

Alluding to this in the previously mentioned blog post, Anthropic mentions that it intends to improve Claude 3’s out-of-the-box capabilities by integrating it with other systems, enabling “interactive” coding, and providing “advanced agentic capabilities.”

That last part recalls OpenAI’s stated goal of building a software agent that automates complex tasks, such as moving data from one file format to another or filling out expense reports and entering them into accounting software without human intervention. OpenAI already offers an API that lets developers build “agent-like experiences” into their products, and Anthropic appears intent on providing comparable features.

Could Anthropic’s next product be an image generator? To be honest, we would be surprised. Image generators are the subject of heated debate these days, mostly over copyright and bias concerns. Google recently disabled its image generator after it injected diversity into pictures with an absurd disregard for historical context. And several image generator vendors are in legal disputes with artists, who accuse them of training GenAI on the artists’ work without credit or compensation.

We are curious to see how Anthropic’s technique for training GenAI, “constitutional AI,” evolves. The company asserts that the technique makes GenAI’s behavior easier to understand, more predictable, and simpler to adjust. Constitutional AI seeks to align AI with human intentions by having models answer questions and perform tasks according to a simple set of guiding principles. As an example, Anthropic said that for Claude 3 it added a principle, informed by crowdsourced feedback, that instructs the models to be understanding of and accessible to people with disabilities.

Whatever its ultimate goal, Anthropic is in it for the long haul. According to a pitch deck leaked in May 2023, the company aims to raise as much as $5 billion over the next year or two, which might be the minimum it needs to stay competitive with OpenAI. (Training models isn’t cheap, after all.) With committed capital and pledges totaling $4 billion from Amazon and $2 billion from Google, plus more than a billion from other backers, it’s off to a good start.