On Wednesday, Meta unveiled “Imagine with Meta AI,” a free, standalone website that uses its Emu image synthesis model to generate AI images. Meta trained the model, which renders a new image from a written prompt, on 1.1 billion publicly visible images from Instagram and Facebook. Previously, Meta’s version of this technology was available only in messaging and social networking apps such as Instagram.

If you are active on Instagram or Facebook, it’s quite possible that a photo of you (or one you took) helped train Emu. The old adage “If you’re not paying for it, you are the product” has taken on a new meaning. That said, Instagram users were uploading more than 95 million photos a day as of 2016, so the images Meta used to train its AI model represent only a small subset of its total photo library.

Since Meta says it trains on publicly visible images, setting your Instagram or Facebook photos to private should keep them out of future AI model training, at least until the company decides to change its policy.

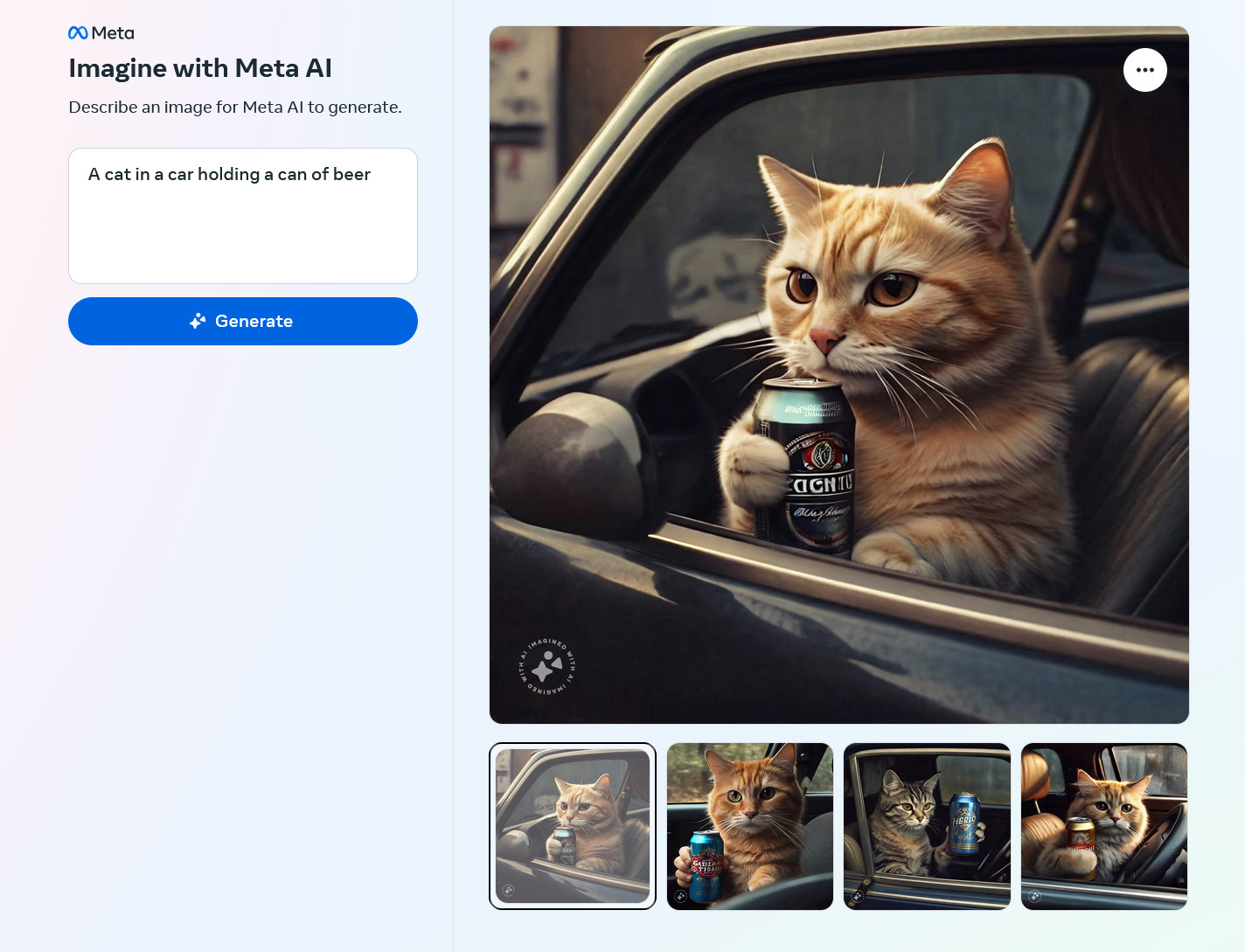

Like Stable Diffusion, DALL-E 3, and Midjourney, Imagine with Meta AI generates new images based on what it “knows” about visual concepts learned from its training data. Creating images on the new site requires a Meta account, which you can set up by importing an existing Facebook or Instagram account. Each generation produces four 1280×1280-pixel JPEG images, each marked with a small “Imagined with AI” watermark logo in the bottom left-hand corner.

“We’ve enjoyed hearing from people about how they’re using imagine, Meta AI’s text-to-image generation feature, to make fun and creative content in chats,” according to a release from Meta. “Today, we’re expanding access to imagine outside of chats, making it available in the US to start at imagine.meta.com. This standalone experience for creative hobbyists lets you create images with technology from Emu, our image foundation model.”

We ran a series of casual, low-stakes tests on Meta’s new AI image generator using our “Cat with a Beer” image synthesis protocol and got some visually interesting results, as shown above. (As an aside, we noticed that many of Emu’s images of people resembled typical Instagram fashion posts.)

AI-generated images of “a cat in a car holding a can of beer” created by Meta Emu on the “Imagine with Meta AI” website

Meta’s model produces photorealistic images fairly well, though not quite at Midjourney’s level. It handles complex prompts better than Stable Diffusion XL, but it may fall short of DALL-E 3. It renders different media, such as watercolor, needlework, and pen-and-ink, with mixed results, and it struggles to render text. Its images of people appear to include a range of ethnic backgrounds. Overall, it seems about average by the standards of current AI image synthesis.

Thanks to Instagram and Facebook, this is now a reality.

An AI-generated image of a “psychedelic emu” created on the “Imagine with Meta AI” website

So what do we know about Emu, the AI model behind Meta’s latest image generation features? According to a research paper Meta published in September, Emu’s ability to produce high-quality images comes from a process called “quality-tuning.” Whereas conventional text-to-image models are trained on large numbers of image-text pairs, Emu adds a step after pre-training that focuses on “aesthetic alignment” using a small set of visually appealing images.

At the core of Emu, however, is the massive pre-training dataset of 1.1 billion text-image pairs pulled from Instagram and Facebook. Meta’s research paper does not say where Emu’s training data came from, but reports from the Meta Connect 2023 conference quote Meta’s president of global affairs, Nick Clegg, as confirming that the company used public Facebook and Instagram posts as training data for its AI models.

Meta’s ability to draw on huge amounts of image and caption data from its own services sets it apart from other AI companies. Other image synthesis models have been trained on images scraped from the web without permission or licensed from commercial stock image libraries. And of all the papers we’ve read on major image synthesis models, Meta’s Emu paper is the first that does not include a disclaimer about the model’s potential to generate reality-warping disinformation or harmful content. That may reflect how accustomed people have become to AI image synthesis models, which are now ubiquitous. Whether that is a good thing is debatable.

There is a tiny disclaimer at the bottom of the website that reads: “Images are and may be inaccurate or inappropriate.” Meta also appears to be addressing potentially harmful outputs with filters and a watermarking system that is not yet in place (“In the coming weeks, we’ll add invisible watermarking to the imagine with Meta AI experience for increased transparency and traceability,” the company says).

The images the model generates may be inaccurate (can cats drink beer?), and some people may question the ethics of training it on 1.1 billion photos from Instagram and Facebook. Still, generating them is fun. Of course, that fun may be offset by an equal measure of unease, depending on your mood and how you feel about the pace of progress in AI image synthesis.