I. Introduction

Generative AI is a family of artificial intelligence algorithms and models designed to generate new, often realistic, data samples or content that mimic existing examples. Unlike conventional AI systems that focus on recognizing patterns or making predictions from input data, generative AI goes a step further by creating entirely new data instances within the same domain as the training data.

This kind of AI frequently uses neural networks, particularly generative models, to learn and reproduce the patterns and structures contained in the data it has been trained on. Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and autoregressive models are examples of generative AI models.

Generative AI has applications in a variety of domains, including image and video synthesis, text generation, music composition, and others. Its capabilities have aroused both excitement and alarm: excitement because of its potential for creative content generation, and alarm because of ethical concerns about the creation of realistic synthetic content and the risk of misuse, particularly through deepfake technology.

Generative AI vs AI

Generative AI is concerned with creating new and original content, such as chat responses, designs, synthetic data, and even deepfakes. It is valuable in creative fields and for novel problem-solving because it can produce a wide range of new outputs on its own.

As noted above, generative AI builds on neural network techniques such as transformers, GANs, and VAEs. Other types of AI, by contrast, commonly employ techniques such as convolutional neural networks, recurrent neural networks, and reinforcement learning.

Generative AI often begins with a prompt, through which a user or data source supplies a starting query or data set to guide content generation. Exploring variations of the content can be an iterative process. Traditional AI algorithms, by contrast, typically process input and produce a result by following a predefined set of rules.

Significance of Generative AI in AI

Generative AI is important in artificial intelligence because of its capacity to generate new, realistic data, stimulate creativity, aid in simulation and training, address data shortages, personalize content, and advance research. It promotes innovation and human-machine collaboration and helps address difficulties in a variety of sectors, although its ethical implications, particularly those surrounding misuse, must be carefully considered.

Brief history and evolution

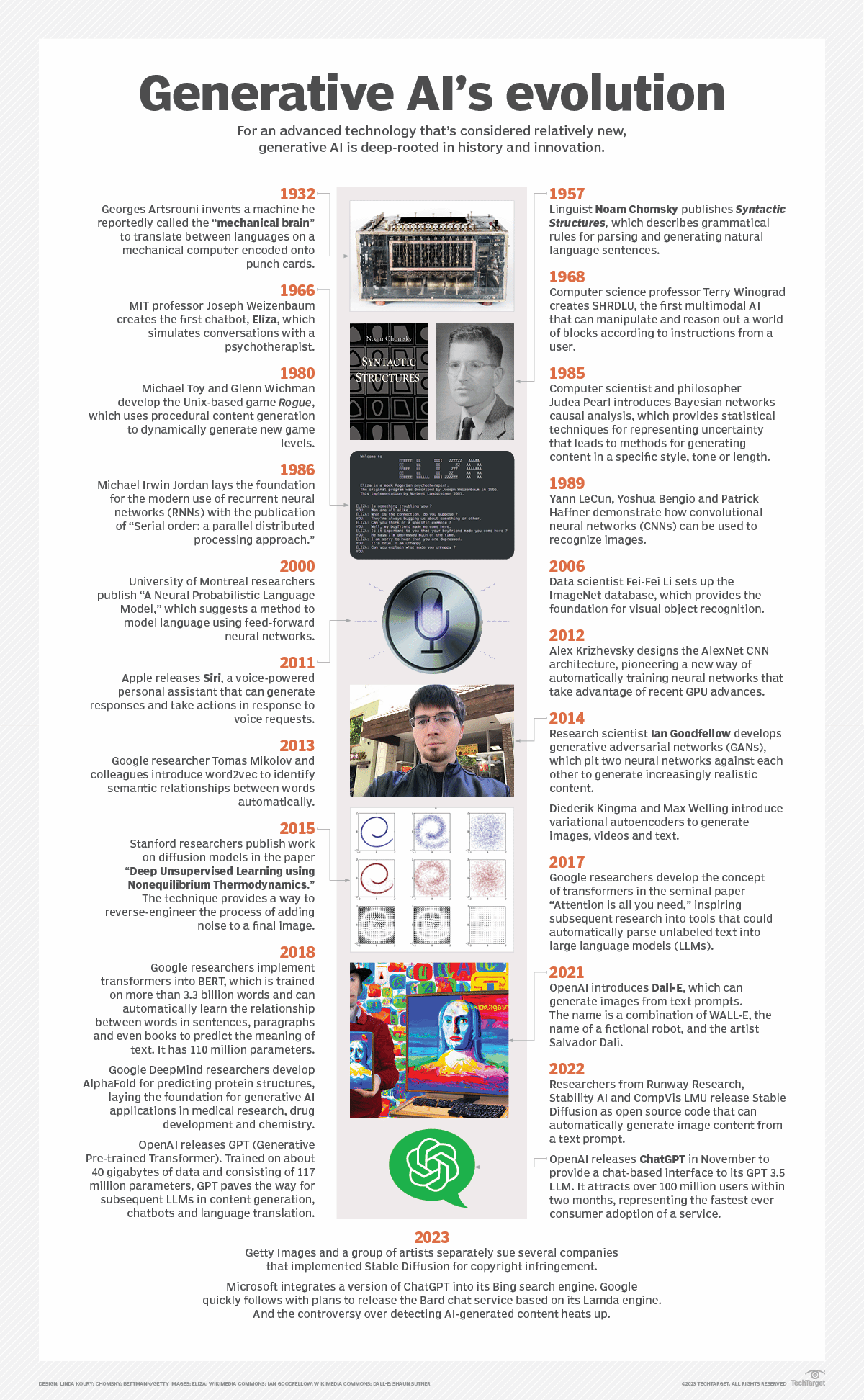

The history of generative AI runs from early theoretical foundations to the powerful, versatile models with real-world applications that exist today. Progress is driven by continuous innovation and research, which opens up new possibilities and challenges in the field. The following summarizes the history and evolution of generative AI:

- Early Foundations (1950s-2000s): The concept of generative models has its origins in early AI research, with pioneers such as Alan Turing investigating machine-generated material. However, progress was held back by limited processing power and the lack of more sophisticated algorithms.

- Markov Chain Monte Carlo (MCMC) Methods and Bayesian Approaches (1990s-2000s): Techniques such as MCMC were used for probabilistic modeling, opening the way for Bayesian approaches to generative modeling. These approaches, however, were computationally demanding and had limitations when dealing with high-dimensional data.

- Autoencoders (2000s-2010s): In the 2000s, autoencoders, a type of neural network, emerged as a generative model. They learned to encode and reconstruct data, but their ability to generate new data was limited.

- Variational Autoencoders (VAEs) (2014): VAEs pioneered a probabilistic framework for generative modeling, enabling more flexible and expressive latent space representations. This was a huge step forward in generative AI.

- Generative Adversarial Networks (GANs) (2014): GANs, proposed by Ian Goodfellow and colleagues, transformed generative AI. GANs are made up of a generator and a discriminator that learn by competing with each other, resulting in the generation of highly realistic data. GANs gained popularity due to their capacity to generate convincing images and other content.

- WaveGAN and Text-to-Speech Synthesis (2018): WaveGAN demonstrated the use of GANs to generate realistic audio waveforms in 2018. Concurrently, advances in text-to-speech synthesis showed the capability of generative models to produce natural-sounding, human-like voices.

- OpenAI’s Language Models (2019-present): The release of models such as GPT-2 and GPT-3 by OpenAI revealed the power of large-scale language models for text generation. These models demonstrated the ability to generate coherent and contextually appropriate text on a variety of subjects.

- Continued Progress and Diverse Applications (2020s): Ongoing generative AI research and development continues to push the frontiers of what is achievable. Applications include art, music, healthcare, and many more fields. Researchers are looking into ethical issues and mitigation techniques, especially in the context of deepfake technology.

II. Understanding Generative AI

How it works

Generative AI begins with a prompt, which could be text, an image, a video, a design, musical notes, or any other input that the AI system can handle. In response to the prompt, various AI algorithms return new content. Examples of such content include essays, solutions to problems, and convincing fakes made from photographs or recordings of a person’s voice.

Early versions of generative AI required data to be submitted via an API or another complicated mechanism. Developers had to become familiar with specialized tools and write programs in languages such as Python.

Now, generative AI pioneers are creating better user experiences that allow you to describe a request in plain language. After an initial response, you can further customize the results by giving feedback on the style, tone, and other qualities that you want the generated content to reflect.

What are generative models?

Generative models are a class of artificial intelligence models designed to generate new data samples that resemble a given training dataset. These models learn the underlying patterns and structures in the training data and use this knowledge to create new, similar instances. Generative models play a crucial role in various applications, including image synthesis, text generation, and data augmentation.
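As a minimal sketch of this idea, the Python snippet below fits about the simplest possible generative model, a single Gaussian, to a small dataset and then samples new values from it. The data, the function names `fit_gaussian` and `generate`, and the choice of a Gaussian are all illustrative assumptions; real generative models learn far richer distributions, but the learn-then-sample pattern is the same.

```python
import random
import statistics

def fit_gaussian(samples):
    """'Training': estimate the mean and standard deviation of the data."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(mean, std, n, rng):
    """'Generation': draw n new values from the fitted distribution."""
    return [rng.gauss(mean, std) for _ in range(n)]

# Hypothetical training data (for example, heights in centimetres).
training_data = [158.0, 162.5, 165.0, 170.2, 171.8, 174.0, 176.5, 180.1]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
mean, std = fit_gaussian(training_data)
new_samples = generate(mean, std, 5, rng)

print(f"learned mean={mean:.1f}, std={std:.1f}")
print("generated:", [round(x, 1) for x in new_samples])
```

The generated values are not copies of the training data; they are new draws that follow the same overall pattern, which is the defining property of a generative model.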

Neural networks and generative AI

Neural networks, a key element of artificial intelligence, draw inspiration from the structure and function of the human brain. These networks are made up of interconnected nodes, or neurons, arranged in layers, with a weight assigned to each connection. Neural networks are trained on data to identify patterns, correlations, and representations, which they then use to make predictions or produce new content.
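The layered structure described above can be sketched in a few lines of plain Python. This is an illustrative forward pass only: the layer sizes, the sigmoid activation, and the helper names `dense_layer` and `random_layer` are assumptions made for the example, and the weights are random rather than trained.

```python
import math
import random

def sigmoid(x):
    """Squash a value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: each neuron computes a weighted sum
    of its inputs plus a bias, then applies the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def random_layer(n_inputs, n_neurons, rng):
    """Create untrained weights (one list per neuron) and zero biases."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases

rng = random.Random(42)
hidden_w, hidden_b = random_layer(3, 4, rng)   # 3 inputs -> 4 hidden neurons
output_w, output_b = random_layer(4, 2, rng)   # 4 hidden -> 2 output neurons

x = [0.5, -1.2, 3.0]
hidden = dense_layer(x, hidden_w, hidden_b)
output = dense_layer(hidden, output_w, output_b)
print(output)
```

Training would adjust the values in `hidden_w` and `output_w` so that the outputs match the patterns in the data; that step is omitted here.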

Neural networks are important in the setting of generative artificial intelligence. In particular:

Generative Models: Neural networks serve as generative models, specifically in architectures such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). After learning the underlying distribution of the training data, these models can produce new, realistic samples that closely resemble the original dataset.

Learning Patterns: Neural networks are highly effective at extracting complex representations and patterns from large amounts of data. Generative AI uses this capability to capture and replicate the properties of the input data during generation.

Transfer Learning: Neural networks that have already been trained, like those used in computer vision or natural language processing, are frequently fine-tuned for generative tasks. Through transfer learning, knowledge gained in one domain can improve performance in another.

Designs for Various Modalities: Neural network architectures are tailored to particular generative tasks. For example, convolutional neural networks (CNNs) are used for image generation and recurrent neural networks (RNNs) for sequence generation, whereas transformer architectures are used across modalities such as text and images.

Adversarial Training: In GANs, adversarial training involves pitting two neural networks, a discriminator and a generator, against one another. While the discriminator attempts to distinguish between real and generated samples, the generator seeks to produce realistic samples. This rivalry forces the generator to produce increasingly convincing outputs.

In conclusion, neural networks are the foundation of generative artificial intelligence (AI). They allow for the modeling of intricate data distributions, the learning of patterns, and the production of new content in a variety of media, including text and graphics as well as other creative outputs.

Types of Generative Models

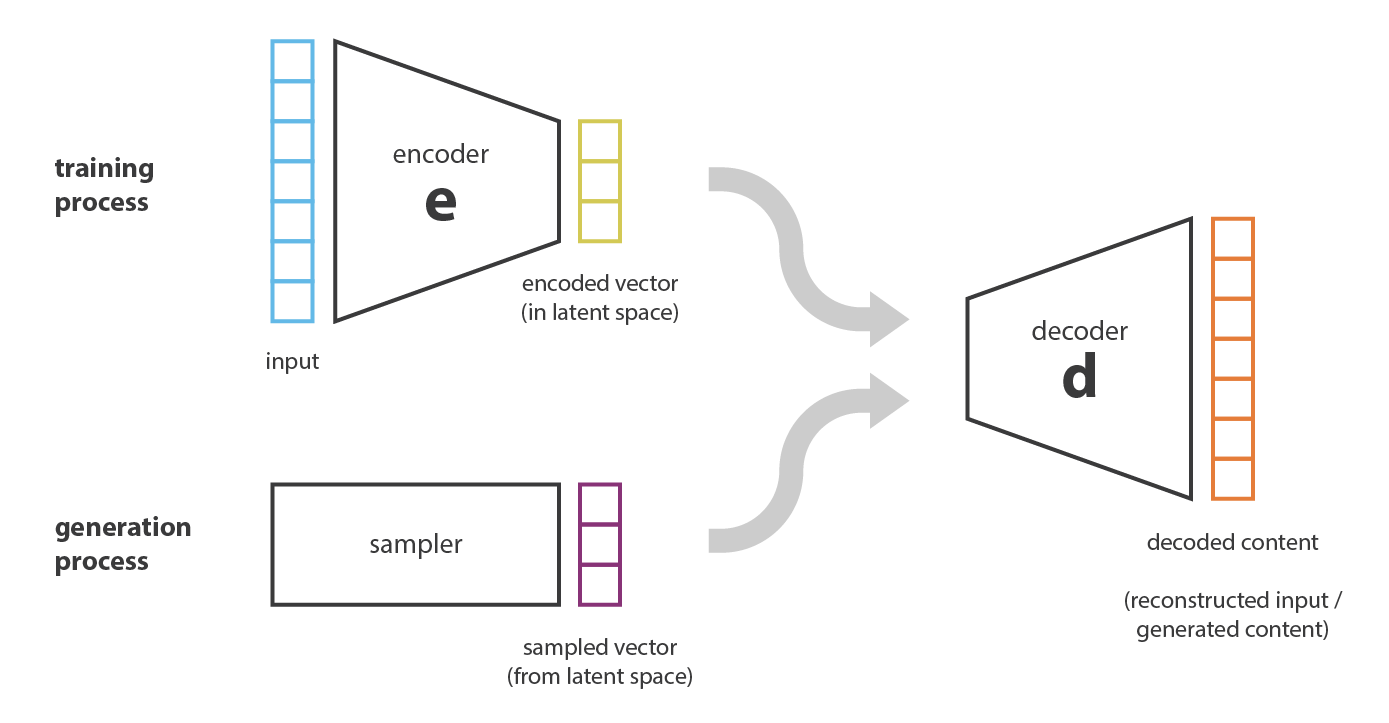

- Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) are generative models that add a probabilistic framework to conventional autoencoders. They consist of an encoder and a decoder. The encoder maps input data to a probability distribution over a latent space, and the decoder reconstructs data from samples drawn from that distribution. VAEs balance accurate reconstruction against a well-behaved latent space by using an objective function that includes a regularization term, together with a reparameterization trick for efficient training. Because the latent space is probabilistic, VAEs can produce novel and diverse samples. Applications include data denoising and image generation; a common drawback is that generated images tend to be blurry.
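For readers who want to see the objective written out, the commonly used form is shown below. The notation is an assumption introduced here, not taken from the text above: $q_\phi(z \mid x)$ is the encoder, $p_\theta(x \mid z)$ is the decoder, and $p(z)$ is the prior over the latent space, usually a standard normal distribution. The first term rewards accurate reconstruction, the second is the regularization term, and the last expression is the reparameterization trick.

```latex
\mathcal{L}(\theta, \phi; x) =
  \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big),
\qquad
z = \mu_\phi(x) + \sigma_\phi(x) \odot \epsilon,
\quad \epsilon \sim \mathcal{N}(0, I)
```

Writing $z$ as a deterministic function of the encoder outputs and independent noise $\epsilon$ is what allows gradients to flow through the sampling step during training.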

- Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are generative models based on adversarial training. A generator produces synthetic data, while a discriminator distinguishes between real and generated data. Through adversarial training, the generator strives to produce data that passes as real, whereas the discriminator improves its ability to tell the two apart. GANs are used in style transfer and image synthesis, among other applications. Challenges include training instability and ethical concerns about realistic synthetic content such as deepfakes.
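The competition between the two networks is usually written as a minimax game. In the sketch below the symbols are assumptions introduced for illustration: $G$ is the generator, $D$ is the discriminator (which outputs the probability that its input is real), $p_{\text{data}}$ is the distribution of real data, and $p_z$ is the noise distribution fed to the generator.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize this value by scoring real samples high and generated samples low, while the generator tries to minimize it by producing samples that the discriminator scores as real.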

- Autoregressive Models

Autoregressive models generate data one element at a time, modeling the probability of each element conditioned on the elements that precede it. PixelCNN and PixelRNN are two examples of autoregressive image-generation models. These models are used in tasks such as time series prediction and text generation, although generation can be computationally intensive because elements are produced sequentially.
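A toy character-level example makes the autoregressive idea concrete. The sketch below is a deliberate simplification: it conditions each character only on the single character before it (a first-order model), whereas models such as PixelCNN condition on many preceding elements. The corpus and the function names are hypothetical.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Count how often each character follows each preceding character."""
    counts = defaultdict(lambda: defaultdict(int))
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def sample_next(counts, prev, rng):
    """Sample the next character in proportion to how often it followed prev."""
    followers = counts[prev]
    chars = list(followers)
    weights = [followers[c] for c in chars]
    return rng.choices(chars, weights=weights, k=1)[0]

def generate(counts, start, length, rng):
    """Build the output one element at a time, each conditioned on the last."""
    out = [start]
    while len(out) < length:
        if not counts.get(out[-1]):  # no observed continuation: stop early
            break
        out.append(sample_next(counts, out[-1], rng))
    return "".join(out)

corpus = "the cat sat on the mat and the rat ate the hat"
model = train_bigram(corpus)
rng = random.Random(1)  # fixed seed so the sketch is repeatable
sample = generate(model, "t", 30, rng)
print(sample)
```

Because every character must be sampled before the next one can be chosen, generation is inherently sequential, which is the source of the computational cost mentioned above.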

III. Applications of Generative AI

Image Generation

Generative AI plays a significant role in image generation, enabling the production of synthetic images that closely resemble real-world examples. Its uses range from artistic and design work to practical applications in data augmentation and computer vision. Despite these advantages, the generation of synthetic and potentially deceptive visual content raises ethical considerations that must be thoroughly examined. Key aspects of its role in image generation include:

- Realistic Image Generation: Generative AI models, specifically Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), can produce realistic, high-quality images. By learning the fundamental structures and patterns present in the training data, these models generate novel images.

- Data Augmentation: Generative models are used for data augmentation in machine learning. Generating additional synthetic images expands the dataset, which improves the robustness and generalization of image classifiers and other computer vision models.

- Style Transfer: This technique uses generative models to apply the artistic style of one image to another. It has applications in art and design.

- Image-to-Image Translation: Generative models demonstrate exceptional performance in image-to-image translation tasks, which involve converting an input image to a different domain while maintaining its fundamental attributes. This may involve converting satellite images to maps or translating images from day to night.

- Super-Resolution: By producing high-resolution images from lower-resolution inputs, generative models contribute to super-resolution tasks. This is valuable in situations that demand detailed images, such as medical imaging or surveillance footage enhancement.

- Creative Art and Design: Generative AI is used in creative disciplines to generate art and design by synthesizing novel visual elements. Artists and designers use generative models to explore new concepts and aesthetics.

- Deepfake Technology: Generative AI is used to produce deepfake content, in which features are convincingly substituted in images or videos. This practice is controversial: while it demonstrates the capacity of generative models to manipulate visual content, it also raises ethical concerns.

- Conditional Image Generation: Certain generative models support conditional image generation, which lets the user control or specify particular attributes or features of the generated image.

Text Generation

Generative AI is important in text generation, contributing to a variety of applications and creative efforts. With the development of sophisticated models and architectures, generative AI’s role in text generation has rapidly expanded. While AI delivers benefits and efficiencies, ethical concerns such as bias in generated language and misinformation must be handled carefully. Here are some of the most important aspects of its role in text generation:

- Content Generation: Generative models, such as language models based on recurrent neural networks (RNNs) or on transformer architectures like GPT (Generative Pre-trained Transformer), can produce coherent and contextually appropriate content. This capability is useful for creating content such as articles, stories, and creative writing.

- Language Translation: Generative models are used in language translation tasks to translate text from one language to another. Transformer models, in particular, have shown state-of-the-art performance in multilingual translation tasks.

- Dialog Generation: Generative AI is used in chatbots and virtual assistants to generate human-like natural language responses. This is accomplished by training the model on massive datasets of conversations, which allows the model to recognize context and create relevant responses.

- Code Generation: Generative models can help with code generation in software development. They can produce code snippets from natural language descriptions, as well as convert high-level specifications into executable code.

- Content Summarization: Generative models help with automatic text summarization by producing short and valuable summaries of lengthier texts. This is useful for processing large amounts of data and extracting critical insights.

- Poetry and Creative Writing: Generative AI has been used to generate poetry and other forms of creative writing. Models can develop distinctive and innovative textual outputs by learning patterns from large datasets.

- Personal Assistants and Voice Interfaces: In voice interfaces, generative AI is used to transcribe spoken words into text as well as to produce text. This technology powers virtual assistants and enables voice interactions and commands.

- Conditional Text Generation: Some generative models support conditional text generation, which allows you to control specific attributes or themes. This allows for the creation of text with specific properties or within predetermined constraints.

- Storytelling and Narrative Generation: Generative models help with interactive storytelling and narrative generation by synthesizing text dynamically based on user inputs or established plot structures. This technology has potential applications in gaming, education, and immersive experiences.

Music and Audio Generation

Generative AI has a transformational impact on music and audio generation, contributing to both creative and practical undertakings. The impact of generative AI on music and audio production is multifaceted, spanning from assisting musicians and composers in their creative processes to enabling novel applications in sound design, voice synthesis, and interactive music experiences. As with other creative fields, ethical concerns about the originality and attribution of generated content must be addressed. Here are some of the most important characteristics of its role in this domain:

- Music Composition: Using patterns learned from existing musical compositions, generative models can generate music on their own. Original pieces of music in various genres have been created using recurrent neural networks (RNNs) and transformer-based models.

- MIDI Generation: Using generative models, MIDI (Musical Instrument Digital Interface) data can be generated. This enables the creation of digital music, which may then be processed, manipulated, and played using virtual or physical instruments.

- Harmony and Melody Generation: Generative AI can help with harmonies and melodies. Models can learn from existing musical structures and develop new arrangements or improvisations, assisting composers and musicians in their creative process.

- Audio Synthesis and Sound Design: Generative models help in audio synthesis and sound design by generating new sounds or modifying old ones. This is useful in the creation of music, movies, and other media that require distinctive and immersive audio experiences.

- Style Transfer and Remixing: Generative AI style transfer approaches enable the transformation of music into new styles. This can involve remixing songs from other genres or altering a piece of music to sound like a specific performer.

- Voice and Speech Synthesis: Generative models are used in voice synthesis to create natural-sounding voices. This technology is used in virtual assistants, audiobooks, and voiceovers, and it provides a versatile tool for producing spoken content.

- Automatic Accompaniment: Generative AI can automatically generate accompaniment for existing tunes or vocals. This is useful for musicians who want to try out different arrangements and musical accompaniments.

- Interactive and Adaptive Music: Generative methods help to create interactive and adaptive musical experiences. These models can respond to user inputs in real time, creating dynamic and individualized musical compositions.

- Augmenting Data in Audio Training Sets: To supplement training datasets in machine learning for audio processing, generative models are utilized. This entails creating more synthetic audio samples to increase the diversity of the data and the resilience of machine learning models.

Video Generation

Generative AI is important in video generation, as it contributes to both artistic and practical applications. The importance of generative AI in video generation ranges from creative expression and content creation to practical applications in data augmentation and virtual production. As with other generative AI applications, ethical concerns about the possible exploitation of video modification technologies must be addressed. Here are some of the most important characteristics of its role in this domain:

- Deepfake Technology: Generative models are frequently employed in deepfake technology, where they can alter videos to replace faces or change content convincingly. While this presents ethical problems, it does demonstrate the power of generative AI in video modification.

- Video Synthesis and Animation: Generative models, specifically Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), can produce realistic and diverse video sequences. This has uses in video game design, animation, and the generation of virtual reality material.

- Content Production and Storyboarding: Generative models help with content production by producing video sequences and assisting with storyboarding. This can be useful during the pre-production phase of a film or video game.

- Aesthetics and Style Transfer: As in image generation, generative AI can be used to transfer style to videos. This involves altering a video’s visual style to mirror the qualities of another, adding to creative and artistic expression in video content.

- Data Augmentation for Video Datasets: Generative models are used for data augmentation in machine learning for video analysis. These models generate synthetic video samples that increase the diversity of training datasets, boosting the performance and generalization of video analysis algorithms.

- Video-to-Video Translation: Generative models are employed for video-to-video translation, which involves transforming the content of a video in one domain into another. This can involve tasks such as turning day scenes into night scenes or transforming rough cartoon footage into realistic video.

- Interactive and Dynamic Content: Generative AI makes it possible to create interactive and dynamic video content. This is especially useful in applications like virtual worlds, where generative models may respond to user inputs and generate adaptive video content in real-time.

- Virtual Production: Generative AI contributes to virtual production in the film and entertainment industries. This entails the real-time creation of digital worlds and effects, hence increasing the efficiency and creative possibilities of filmmaking.

- Video Quality Enhancement: Generative models can be used to improve video quality by improving resolution, reducing noise, or recovering missing information. This is useful in situations where high-quality video content is essential.

IV. Challenges and Ethical Considerations

While generative AI has various benefits and uses, it also presents significant ethical concerns. Addressing these ethical challenges necessitates a multifaceted approach involving researchers, industry stakeholders, legislators, and the general public. To mitigate potential hazards and ensure ethical usage of generative AI, guidelines, standards, and responsible procedures for its development and deployment are required. Here are some of the most important ethical issues of generative AI:

- Deepfakes and Misinformation: Concerns have been raised about the ability of generative models to produce realistic synthetic content, such as deepfake videos and photos. The risks include the spread of false information, the fabrication of evidence, and the creation of content intended to deceive.

- Privacy Concerns: Generative AI can be used to create synthetic images of people, possibly jeopardizing their privacy. This has ramifications for personal security and raises issues of consent and ethical use of created content.

- Bias and Fairness: When trained on biased datasets, generative models can perpetuate and even exacerbate existing data biases. This raises questions about fairness and equity, particularly when the generated content involves human characteristics like gender, race, or age.

- Intellectual Property Issues: Because generative models can produce content that closely resembles prior works, concerns about intellectual property rights and copyright violations arise. It can be challenging to determine who owns and has rights to generated content.

- Authenticity and Manipulation: The ability to create increasingly realistic content raises concerns about the integrity of visual and audio data. This has ramifications for media credibility and calls into question existing techniques of content verification.

- Impacts on Employment: Generative AI can automate creative tasks, which may affect professions involving content creation, such as graphic design and some areas of video production. This raises concerns about the displacement of human workers and the need for retraining for emerging industries.

- Security Risks: Generative AI can be exploited to generate synthetic data for malicious purposes, such as producing plausible but fake biometric data or forged identity documents. This creates security vulnerabilities and calls into question established authentication methods.

- Unforeseen Repercussions: The use of generative models in a variety of disciplines can have unforeseen repercussions. For example, the deployment of AI-generated material on social media or news platforms may have unanticipated consequences for public opinion, social discourse, and political landscapes.

- Inadequate Regulation and Standards: The fast development and deployment of generative AI technology has outpaced the development and adoption of explicit norms and standards. Because of the lack of regulatory frameworks, these technologies may be used in an uncontrolled and potentially destructive manner.

Limitations of Generative AI

Early generative AI systems vividly illustrate its many limitations. Some of the difficulties that generative AI poses stem from the specific approaches used to implement particular use cases. A summary of a complex issue, for example, is easier to read than an explanation that cites multiple sources to support its key points. The summary’s readability, however, comes at the expense of a user’s ability to verify where the information comes from.

Here are some limits to consider while developing or deploying a generative AI app:

- It does not always identify the source of its content.

- It might be challenging to assess the bias of primary sources.

- Realistic-sounding content makes it more difficult to recognize incorrect information.

- Understanding how to tune a system for new situations can take a lot of work.

- The results can gloss over bias, prejudice, and hatred.

V. Future Trends and Developments

Advancements in Generative AI Research

Recent advances significantly broaden the possibilities of generative AI across a wide range of disciplines, from language processing to computer vision, with continuous research pushing the envelope. Among the most significant advances in generative AI research are:

- Transformers and Attention Mechanisms: Widely used for a variety of generative tasks.

- Large-Scale Models: Notable models for natural language processing and cross-modal tasks include GPT-3 and CLIP.

- Self-Supervised Learning: Effective training without explicit labels, useful for data with few labels.

- Adversarial Training Enhancements: Improved GAN training stability and quality.

- Flow-Based Generative Models: Models such as Glow and RealNVP, which allow efficient sampling and training.

- Cross-Modal Generation: Managing several modalities, which is essential for activities such as image captioning.

- Conditional and Controlled Generation: Allowing users to select content qualities.

- Unsupervised Representation Learning: Learning meaningful representations without labels.

- Neural Architecture Search (NAS): A method for automatically discovering optimal neural network architectures.

- Continual and Meta-Learning: Rapid learning and adaptation to new tasks or domains.

- Zero-Shot Learning: Performing tasks for which no explicit examples were seen during training.

- Attention to Ethical Considerations: There is a growing emphasis in generative AI on addressing biases, responsible use, and transparency.

Emerging Applications and Industries

With continued improvements, generative AI is proving adaptable across artistic, technical, and business areas. Among the new applications of generative AI are:

- Healthcare: Drug discovery and synthesis of medical imaging.

- Fashion and Design: Clothing as well as interior design.

- Robotics: Planning of motion and autonomy.

- Creativity and Art: Art creation and music composition.

- Content Creation: Video game design, storyboarding, and animation.

- Virtual and Augmented Reality: Creation of environments and avatars.

- Education: Personalized e-learning content creation.

- Legal and Compliance: Legal document creation.

- Cybersecurity: Detection of anomalies and network security.

- Finance: Algorithmic trading and financial report production.

- Environmental Science: Climate modeling and environmental forecasting.

- Human Resources: Generation of resumes and candidate profiles.

- Telecommunications: Network optimization and failure prediction.

- Agriculture: Crop planning in response to environmental conditions.

Ethical Frameworks and Regulations

The following are the key ethical frameworks and rules for generative AI:

- Transparency and Explainability: Generative AI decisions must be explained clearly.

- Bias and Fairness Mitigation: Reduce biases in training data and outputs.

- Privacy Protection: Enforce stringent privacy safeguards to prevent the generation of privacy-invasive content.

- Security and Robustness: Establish security and resilience criteria in the face of adversarial attacks.

- Accountability and Responsibility: Specify the legal obligations of persons and organizations.

- Human Oversight and Control: Implement human intervention and control methods.

- Data Governance: Enforce norms for ethical data collection and usage.

- Worldwide Cooperation: Encourage worldwide cooperation to achieve global standards.

- Inclusion of Diverse Stakeholders: Involve diverse stakeholders in decision-making processes.

- Continuous Monitoring and Evaluation: Monitor and evaluate the impact of generative AI on a regular basis.

The Role of Generative AI in Shaping the Future of Technology

By changing how content is produced and enabling creative applications in a variety of industries, generative AI is shaping the future of technology. Its role ranges from creating realistic images, videos, and text to advancing healthcare, design, and tailored user experiences. As generative AI evolves, it influences how we engage with technology, fosters creativity, and drives disruptive advances across multiple fields.