According to a CNBC report, a Microsoft engineer has contacted the FTC with safety concerns over the company’s AI image generator, Copilot Designer, after finding that it produced disturbing images that Microsoft had not addressed. Shane Jones, who has worked at the company for six years, wrote to the Federal Trade Commission complaining that Microsoft would not take Copilot Designer down even after being repeatedly warned that the program could produce harmful images.

While testing Copilot Designer for bugs and safety issues, Jones found that the program produced images of demons and monsters alongside terminology related to abortion rights, as well as teenagers carrying assault weapons, sexualized images of women in violent scenes, and underage drinking and drug use, CNBC reported.

This is absolutely huge. Shane Jones, M$ whistleblower notifies @ftc and @linakhanFTC after finding Sexual, Violent and copyright infringing images on M$ Copilot. Microsoft apparently ignored Shane’s warnings.#CreateDontScrape https://t.co/mRhrNYibwx pic.twitter.com/f3g6vQn7fi

— Jon Lam #CreateDontScrape (@JonLamArt) March 6, 2024

Copilot Designer also reportedly produced images of Disney characters, including Elsa from Frozen, in scenes set in the Gaza Strip, standing in front of demolished buildings and signs reading “free Gaza.” It also produced images of Elsa dressed in an Israel Defense Forces uniform and holding a shield bearing the Israeli flag. An editor at The Verge was able to generate similar images with the tool.

According to CNBC, Jones has been trying to warn Microsoft since December about DALL-E 3, the model behind Copilot Designer. He posted an open letter about the problems on social media, but later took it down after Microsoft’s legal team allegedly contacted him.

In the letter, which CNBC obtained, Jones said that over the previous three months he had repeatedly asked Microsoft to withdraw Copilot Designer from public use until better safeguards were in place. He added that the company had once again failed to make these changes and was still marketing the product to “Anyone, Anywhere, Any Device.”

Microsoft spokesperson Frank Shaw said in a statement provided to The Verge that the company is committed to addressing any concerns employees raise, in accordance with Microsoft’s policies. He said the employee should use the company’s in-product feedback tools and internal reporting channels so that any security bypasses or concerns that could affect its services or partners can be properly investigated, prioritized, and addressed, which would allow Microsoft to validate and test his concerns. Shaw added that Microsoft has set up meetings with product leadership and its Office of Responsible AI to review these reports.

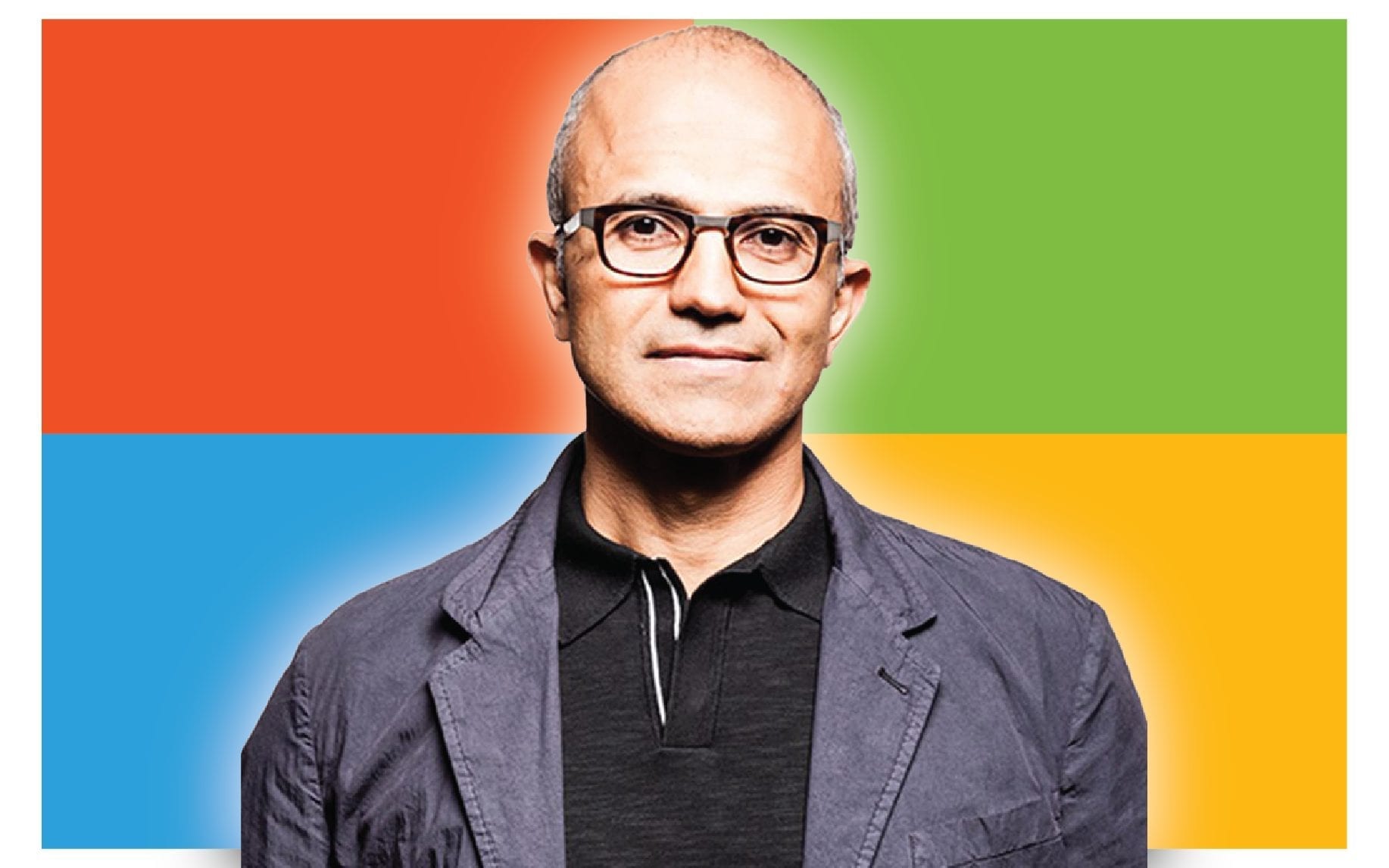

In January, Jones raised his concerns in a letter to several US lawmakers after explicit images of Taylor Swift, reportedly created with Copilot Designer, spread rapidly across X. Microsoft CEO Satya Nadella described the images as alarming and terrible and promised that the company would work on adding more safety measures. Last month, Google temporarily disabled its own AI image generator after users found that it was producing historically inaccurate images, including racially diverse Nazis.