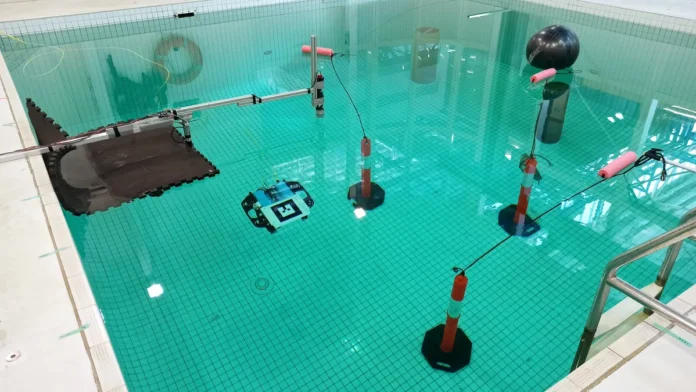

Unmanned underwater vehicles (UUVs) are autonomous underwater machines that operate without human operators. Early applications have included underwater mine disposal and deep-sea exploration. However, UUVs struggle with communication and navigation control because water obstructs the signals they rely on. As a result, scientists have begun developing machine learning methods to help UUVs navigate autonomously.

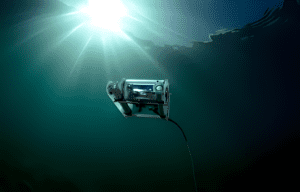

One of the most significant obstacles facing the researchers is the lack of GPS signals, which cannot penetrate beneath the surface of the water. Other navigation methods that rely on cameras are also ineffective because visibility underwater is poor. The scientists changed how the UUV’s training procedure samples from its memory buffer so that it more closely mimics how humans learn.

According to the researchers, one of their primary motivations is to eventually tackle the hazardous task of removing the organisms that accumulate on ship hulls. These accumulations, also referred to as biofilms, increase drag, which raises shipping costs, and threaten the environment by introducing invasive species.

In the study, which was published last month in the journal IEEE Access, researchers from France and Australia taught unmanned underwater vehicles (UUVs) to navigate more precisely in challenging conditions using deep reinforcement learning, a form of machine learning.

UUV models learn through reinforcement learning by first taking random actions, then evaluating the outcomes of those actions against the objective, which in this case is to reach the target destination as precisely as possible. Behaviours that produce favourable outcomes are reinforced, whereas behaviours that produce unfavourable outcomes are avoided. The ocean adds a further navigational difficulty that reinforcement learning models must learn to overcome: strong currents can push vehicles far off their intended course in unpredictable ways. UUVs must therefore navigate while compensating for interference from currents.
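The trial-and-error loop described above can be illustrated with a deliberately tiny sketch. This is not the researchers' deep reinforcement learning setup: it is a toy example with a single decision (how much thrust to apply), and the drift value, the action set, the noise level, and the function names are all assumptions made up for illustration.

```python
import random

# Hypothetical toy problem: a constant current pushes the vehicle by +1.0 per step.
OCEAN_CURRENT = 1.0
# Hypothetical set of thrust choices the vehicle can try.
ACTIONS = [-2.0, -1.0, 0.0, 1.0, 2.0]


def reward(thrust, rng):
    """Negative distance from the target after one step, plus small sensor noise."""
    error = abs(thrust + OCEAN_CURRENT)
    return -error + rng.gauss(0.0, 0.05)


def train(steps=500, epsilon=0.1, seed=0):
    """Try actions, score the outcomes, and favour the actions that scored well."""
    rng = random.Random(seed)
    values = {a: 0.0 for a in ACTIONS}  # running average reward per action
    counts = {a: 0 for a in ACTIONS}
    for _ in range(steps):
        untried = [a for a in ACTIONS if counts[a] == 0]
        if untried:
            action = rng.choice(untried)  # start with arbitrary actions
        elif rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # keep exploring occasionally
        else:
            action = max(ACTIONS, key=values.get)  # repeat what has worked so far
        r = reward(action, rng)
        counts[action] += 1
        values[action] += (r - values[action]) / counts[action]
    return values


def best_action(values):
    """Action with the highest estimated reward."""
    return max(values, key=values.get)
```

In this toy setting the highest-valued thrust is the one that cancels the current. A real UUV controller has to learn a policy over many states and continuous actions, which is where deep reinforcement learning comes in.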

To achieve the best performance, the researchers adjusted a well-established convention of reinforcement learning. Thomas Chaffre, a research associate in the College of Science and Engineering at Flinders University in Adelaide, Australia, and lead author of the study, said that his group's departure from convention is part of a broader shift within the field. According to Chaffre, it is becoming increasingly common for machine learning researchers, including those at Google DeepMind, to challenge long-held assumptions about how reinforcement learning models are trained, in search of small adjustments that can substantially improve training performance.

The researchers focused on the memory buffer used in reinforcement learning, which stores the results of previous actions. Throughout training, random samples of actions and their results are drawn from the memory buffer to update the model’s parameters. In most cases, this sampling is “independent and identically distributed”, according to Chaffre, which means that the experiences the model updates from are chosen completely at random.
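A conventional memory buffer of this kind can be sketched in a few lines. The class below is a generic illustration rather than the code used in the study; the class name, the tuple layout, and the capacity handling are assumptions.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past experiences with uniform random sampling."""

    def __init__(self, capacity, seed=None):
        # A deque with maxlen silently drops the oldest entry once it is full.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state):
        """Record the outcome of one action."""
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size):
        """Draw experiences independently, each with equal probability."""
        # choices() samples with replacement, so every draw is independent
        # of the others and follows the same uniform distribution.
        return self.rng.choices(list(self.buffer), k=batch_size)

    def __len__(self):
        return len(self.buffer)
```

With this scheme, an experience from the very start of training is as likely to be drawn as one from the last few seconds, regardless of how useful either one was.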

The researchers altered the training procedure so that it samples from the memory buffer in a way that more closely resembles how humans learn. Rather than giving every experience an equal chance of being learned from, the procedure assigns greater importance to actions that yielded substantial positive outcomes and to those that occurred more recently.

“As one learns to play tennis, recent experience tends to take precedence,” Chaffre explained. “As you advance, your previous performance at the beginning of your training becomes irrelevant, as it no longer provides any information pertinent to your current skill level.”

Likewise, according to Chaffre, when a reinforcement learning algorithm is learning from past experiences, it should prioritise recent actions that resulted in substantial positive gains.
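One way to picture this kind of prioritisation is to give each stored experience a sampling weight that grows with its reward and shrinks with its age. The article does not give the researchers' actual weighting rule, so the formula below, along with the `decay` and `eps` parameters and the function names, is purely a hypothetical sketch.

```python
import random


def sampling_probabilities(rewards, decay=0.9, eps=0.01):
    """Turn a list of rewards (oldest first) into sampling probabilities.

    Assumed rule, for illustration only:
        weight = (max(reward, 0) + eps) * decay ** age
    where age is 0 for the newest experience. The eps term keeps every
    experience reachable, even those with zero or negative reward.
    """
    n = len(rewards)
    weights = [
        (max(r, 0.0) + eps) * decay ** (n - 1 - i)
        for i, r in enumerate(rewards)
    ]
    total = sum(weights)
    return [w / total for w in weights]


def sample_indices(rewards, batch_size, seed=None, decay=0.9, eps=0.01):
    """Pick buffer indices, favouring recent and high-reward experiences."""
    rng = random.Random(seed)
    probs = sampling_probabilities(rewards, decay, eps)
    return rng.choices(range(len(rewards)), weights=probs, k=batch_size)
```

Under this assumed rule, two experiences with the same reward are not treated equally: the more recent one is more likely to be drawn. Likewise, an old experience can still be sampled often if its reward was large enough to outweigh its age.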

Using this adapted memory buffer technique, UUV models trained faster while consuming less power, the researchers found. Both improvements offer a substantial benefit when a UUV is deployed, according to Chaffre, because although trained models are operationally ready, they still require fine-tuning.

“Training reinforcement learning algorithms with underwater vehicles is extremely hazardous and extremely expensive because we are developing such vehicles,” Chaffre explained. Therefore, he continued, reducing the model’s fine-tuning time can prevent vehicle damage and reduce repair costs.