A new model developed by Apple researchers lets users describe, in plain language, the changes they want to make to a photograph, without having to use photo editing software.

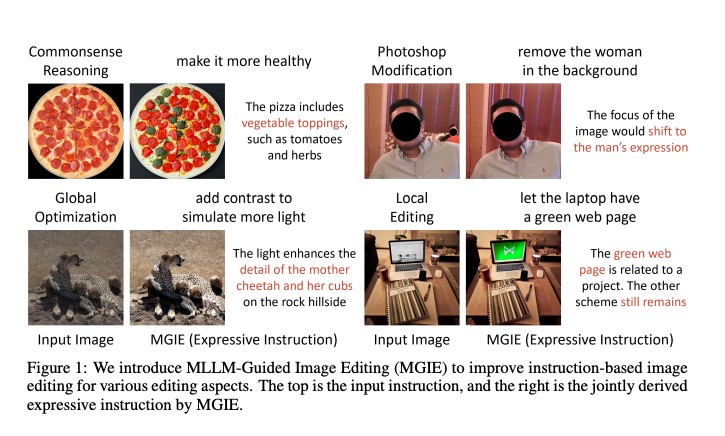

MGIE, short for MLLM-Guided Image Editing, was developed jointly by Apple and the University of California, Santa Barbara. Using text commands alone, the model can crop, resize, rotate, and apply filters to images, and it can also target and enhance specific regions of a picture.

MGIE handles both simple and complex editing tasks, such as changing the brightness or shape of particular objects in a photograph. It uses a multimodal large language model (MLLM) in two distinct ways. First, it learns to interpret the user's prompt. Then it "imagines" what the requested edit would look like; for instance, a request for a bluer sky becomes an increase in the brightness of the sky portion of the image.

To edit a photograph with MGIE, users simply type the changes they want into a text field. The paper illustrates the process with an image of a pepperoni pizza: typing the prompt "improve its health" adds vegetable toppings. In another example, a dim photograph of leopards in the Sahara becomes brighter when the model is instructed to "increase contrast to simulate more light."

According to the paper, rather than relying on brief but ambiguous instructions, MGIE infers explicit, visually aware intent and uses it to produce reasonable edits. The authors report that their extensive experiments, covering a wide range of editing aspects, show that MGIE improves performance while maintaining competitive efficiency. "We also believe the MLLM-guided framework can contribute to future vision-and-language research," the researchers wrote.

According to VentureBeat, Apple has made MGIE available for download on GitHub and has also published a web demo on Hugging Face Spaces. The company has not said what it plans to do with the model beyond research.

Some image generation platforms, such as OpenAI's DALL-E 3, can perform basic edits on images they generate from text prompts. Adobe, the maker of Photoshop, which most people use to edit images, offers its own AI editing model: its generative fill feature, powered by the Firefly AI model, adds generated backgrounds to photographs.

Unlike Microsoft, Meta, and Google, Apple has not been a major player in generative AI. However, Apple CEO Tim Cook has said the company intends to bring more AI features to its devices this year. In December, Apple researchers released MLX, an open-source machine learning framework designed to make it easier to train AI models on Apple Silicon processors.