According to researchers at Google, their artificial intelligence system has the potential to make medicine more accessible to all.

An AI system trained to conduct medical interviews matched or outperformed human doctors at conversing with simulated patients and listing possible diagnoses based on the patient’s medical history.

The chatbot, powered by a Google large language model (LLM), was more accurate than board-certified primary-care doctors at diagnosing respiratory and cardiovascular conditions, among others. During medical conversations, it gathered a comparable amount of information to human doctors and ranked higher on empathy.

“As far as we are aware, this is the first instance where an AI conversational system has been specifically engineered to conduct diagnostic dialogue and collect the patient’s medical history,” says Dr. Alan Karthikesalingam of Google Health in London, a co-author of the study¹. The study was posted on the arXiv preprint repository on January 11. It has not yet been peer reviewed.

The chatbot, known as Articulate Medical Intelligence Explorer (AMIE), is still purely experimental. It has been tested only on actors trained to portray people with medical conditions, not on people with real health problems. “We want the results to be interpreted with caution and humility,” says Karthikesalingam.

Although the chatbot is far from ready for use in clinical care, the authors argue that it could eventually help to democratize health care. Adam Rodman, an internal medicine specialist at Harvard Medical School in Boston, Massachusetts, thinks the technology could be useful, but it shouldn’t take the place of conversations with doctors. “Medicine is just so much more than collecting information, it’s all about human relationships,” he says.

Mastering a Complex Skill:

Few attempts to use LLMs in medicine have examined whether these systems can replicate a doctor’s skill in taking a patient’s medical history and using it to reach a diagnosis. Medical students train extensively to do exactly that, says Rodman. “It’s one of the most important and difficult skills to inculcate in physicians.”

Vivek Natarajan, an AI research scientist at Google Health in Mountain View, California, and a co-author of the study, says that another challenge for the developers was the shortage of real-world medical conversations available as training data. The researchers addressed this by having the chatbot learn from its own “conversations.”

In the first phase, the researchers fine-tuned the base LLM on existing real-world data sets, such as electronic health records and transcribed medical conversations. To train the model further, they prompted the LLM to play two roles: a patient, and an empathetic doctor who asks about the patient’s history and symptoms to reach a diagnosis.

The team also had the model play a third role: a critic that rates the doctor’s communication with the patient and suggests how to improve it. That critique is used to generate improved dialogues and to further train the LLM.
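The three roles described above can be pictured as a simple loop. The following Python sketch is an illustration only, not the researchers’ implementation: `toy_model` is a hypothetical, hard-coded stand-in for an LLM, and the names `Dialogue`, `simulate_dialogue` and `self_play` are assumptions made for this example. It shows one model alternating between doctor and patient, with a critic’s feedback carried into the next round.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical stand-in for an LLM call: (role, prompt) -> generated text.
ModelFn = Callable[[str, str], str]


@dataclass
class Dialogue:
    """One simulated consultation as a list of (speaker, text) turns."""
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def transcript(self) -> str:
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)


def simulate_dialogue(model: ModelFn, scenario: str, feedback: str,
                      max_turns: int = 3) -> Dialogue:
    """Let the same model alternate between the doctor and patient roles."""
    dialogue = Dialogue()
    for _ in range(max_turns):
        # The doctor sees the conversation so far plus any earlier critique.
        doctor_prompt = f"Feedback: {feedback}\n{dialogue.transcript()}"
        dialogue.turns.append(("doctor", model("doctor", doctor_prompt)))
        # The patient sees the scenario it is portraying and the conversation.
        patient_prompt = f"Scenario: {scenario}\n{dialogue.transcript()}"
        dialogue.turns.append(("patient", model("patient", patient_prompt)))
    return dialogue


def self_play(model: ModelFn, scenario: str, rounds: int = 2) -> List[Dialogue]:
    """Generate dialogues, feeding each critique into the next round."""
    feedback = ""
    dialogues = []
    for _ in range(rounds):
        dialogue = simulate_dialogue(model, scenario, feedback)
        # The critic role rates the doctor's side and suggests improvements.
        feedback = model("critic", dialogue.transcript())
        dialogues.append(dialogue)
    # In a real pipeline these dialogues would become further training data.
    return dialogues


def toy_model(role: str, prompt: str) -> str:
    """Deterministic placeholder so the sketch runs without a real LLM."""
    if role == "critic":
        return "ask about symptom duration"
    if role == "doctor":
        if "ask about symptom duration" in prompt:
            return "How long have you had these symptoms?"
        return "What brings you in today?"
    return "I have had a cough."


if __name__ == "__main__":
    for i, d in enumerate(self_play(toy_model, "persistent cough"), start=1):
        print(f"--- round {i} ---")
        print(d.transcript())
```

In this toy version the doctor role never sees the scenario itself, only the conversation and the critic’s feedback, which mirrors the idea that the doctor has to elicit the history through questions.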

To evaluate the system, researchers recruited 20 people who had been trained to portray patients. They held online text-based consultations with both AMIE and 20 board-certified clinicians. They were not told whether they were chatting with a human or a bot.

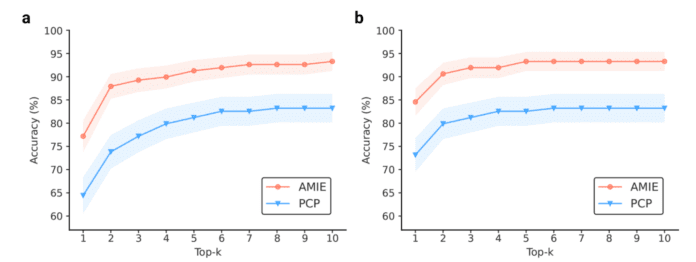

The actors simulated 149 clinical scenarios and were then asked to evaluate their experience. A team of specialists also rated the performance of both AMIE and the physicians.

AMIE Excels in the Test:

The AI system matched or surpassed the physicians’ diagnostic accuracy in all six medical specialties considered. It also outperformed physicians on 24 of 26 criteria for conversation quality, including politeness, explaining the condition and treatment, coming across as honest, and showing care and attention.

“This in no way means that a language model is better than doctors in taking a clinical history,” Karthikesalingam emphasizes. The study suggests that the primary-care physicians may not have been used to text-based chat with patients, which could have affected their performance.

By contrast, an LLM can effortlessly compose persuasive, well-organized responses, Karthikesalingam says, which lets it be consistently considerate without getting tired.

Search for an Unbiased Chatbot:

A critical next step for the research, Karthikesalingam says, is to conduct more detailed studies that examine potential biases and ensure the system is fair across different groups. The Google team is also exploring the ethical considerations of testing the system with people who have real medical problems.

Daniel Ting, a clinician AI scientist at Duke-NUS Medical School in Singapore, agrees that testing the system for biases is essential, so that the algorithm does not penalize ethnic groups that are under-represented in the training data sets.

The privacy of chatbot users also needs to be considered, Ting says. “For a lot of these commercial large language model platforms right now, we are still unsure where the data is being stored and how it is being analyzed,” he says.