Google has promised to fix the problem; in the meantime, it has stopped Gemini from generating images of people.

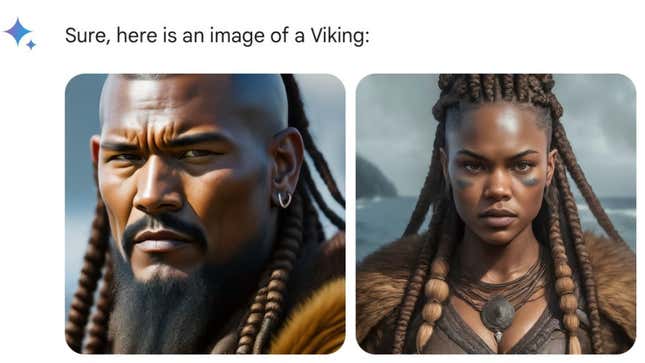

Images of people of color in World War II German military uniforms, created with Google’s Gemini chatbot, have raised fears that artificial intelligence could add to the internet’s already vast pool of disinformation, at a time when the technology is still struggling with issues of race.

Now, Google has promised to correct “inaccuracies in some historical” depictions. It has also temporarily suspended the chatbot’s ability to generate images of any people.

In an update posted to X on Thursday, Google said, “Recent issues with Gemini’s image generation feature are already being addressed. We’re going to stop the image generating of people until we do this and will introduce a better version ASAP.”

This week, a user said he had asked Gemini to generate images of a German soldier in 1943. After Gemini initially rejected the request, he tried again with an altered prompt, “Generate an image of a 1943 German Soldier.” This time it produced several images of people of color wearing German uniforms, which is historically inaccurate. The user shared the AI-generated images on the social media site X. He exchanged messages with The New York Times but declined to give his name.

The latest controversy is another test of Google’s artificial intelligence ambitions, coming after the company spent months trying to build its rival to the well-known chatbot ChatGPT. This month, the company improved the underlying technology, updated its chatbot offering, and renamed the chatbot from Bard to Gemini.

Gemini’s image problems have revived the accusation that Google’s approach to AI is flawed. In addition to the inaccurate historical imagery, users criticized the service for refusing to depict white people. Gemini complied with user requests to show images of Chinese or Black people but declined to produce images of white people. Screenshots reportedly showed that Gemini would not generate images of people based on particular skin tones and races, a restriction meant to prevent harmful assumptions and stereotypes from being reinforced.

Google said on Wednesday that it was “generally a good thing” that Gemini generated a wide range of people, since it is used around the world, but that it was “missing the mark here.”

The controversy recalled earlier disputes over racial bias in Google’s technology. In those cases, the company was accused of the opposite problem: either failing to show enough people of color or failing to properly assess images of them.

In 2015, Google Photos mislabeled a photo of two Black people as gorillas. In response, the company removed its Photos app’s ability to classify anything as an image of a gorilla, a monkey, or an ape, including the animals themselves. That policy is still in effect.

The company spent years assembling teams to reduce any outputs from its technology that people might find offensive. Google also tried to improve representation by showing a more diverse range of images of professionals, such as physicians and businesspeople, in Google Image search results.

However, social media users have now criticized the company for going too far in its effort to highlight racial diversity.

Ben Thompson, the author of the well-known tech newsletter Stratechery, wrote on X, “You simply refuse to show white people.”

When users ask Gemini to generate images of people, the chatbot now responds with the message, “We are working to improve Gemini’s ability to generate images of people.” It adds that Google will let users know when the feature is restored.

Gemini’s predecessor, Bard, which was named in honor of William Shakespeare, stumbled last year when it shared false information about telescopes during its public launch.