Meta is expanding its labeling of artificial intelligence (AI)-generated images on Facebook, Instagram, and Threads to include some synthetic imagery produced with competitors’ generative AI tools. The expanded labels apply where competitors’ tools embed what Meta calls “industry standard indicators” that content is AI-generated and that Meta is able to detect.

As a result, the social media giant expects to label more of the AI-generated images that appear on its platforms in the future. However, it has not provided data such as the ratio of authentic to synthetic content regularly pushed to users. How big a step this might be in the battle against AI-generated disinformation, especially in a year of critical global elections, therefore remains unclear.

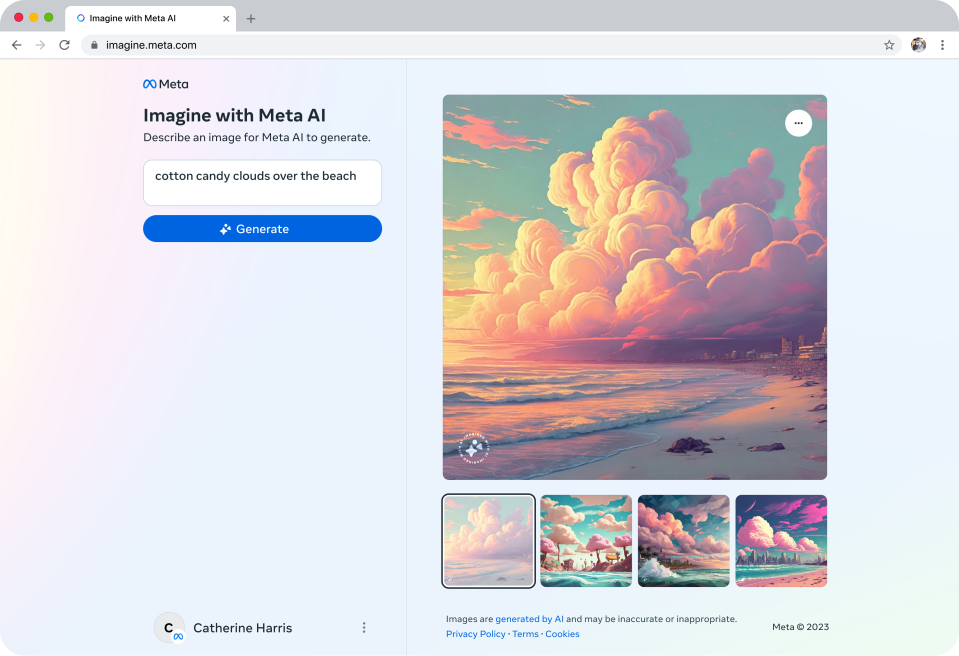

Meta says it already detects and labels “photorealistic images” created with its own generative AI tool, “Imagine with Meta,” which launched last December.

However, it has yet to start labeling synthetic imagery made with other companies’ tools. That is the baby step it is announcing today.

In a blog post announcing the expansion, Nick Clegg, Meta’s president of global affairs, wrote that the company has been working with industry partners on common technical standards that identify material made with AI. Being able to detect these signals, he said, will allow Meta to label AI-generated images that people upload to Facebook, Instagram, and Threads.

According to Clegg, Meta plans to implement the broader labeling in “all languages supported by each app” and to roll it out over the coming months.

When we asked for more information, a Meta representative could not give us a more precise timeline or say which markets would receive the extra labels. However, Clegg’s post indicates that the rollout will be gradual, continuing through the next year. Meta may base its decisions on global election calendars when determining when and where to introduce the expanded labels in various regions.

He also wrote in the blog post that Meta intends to keep to this approach through the next year, during which several significant elections will be held worldwide. The company expects to learn much more during this period about how people use AI to create and share content, what kind of transparency people value most, and how these technologies develop. What it learns will inform both its own approach and industry best practices going forward.

To identify AI-generated imagery, Meta relies on a combination of visible marks that its generative AI tool applies to synthetic images, plus “invisible watermarks” and metadata that the tool embeds within image files. According to Clegg, Meta’s detection technology will look for the same kinds of signals when they are produced by competitors’ AI image-generating tools. Meta has been collaborating with other AI companies through forums like the Partnership on AI to create best practices and common standards for identifying generative AI.
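As a rough illustration of what metadata-based detection can look like, the sketch below scans a file’s raw bytes for provenance markers. It is not Meta’s detector. It assumes, purely for illustration, that the relevant signal is the IPTC “Digital Source Type” vocabulary term for AI-generated media written into embedded XMP metadata; the article does not say which signals Meta actually reads, and the function names are hypothetical.

```python
from typing import Optional

# Assumption: these IPTC Digital Source Type terms stand in for the
# "industry standard indicators" described above. The article does not
# name the exact signals Meta checks.
AI_SOURCE_MARKERS = (
    b"digitalsourcetype/trainedAlgorithmicMedia",
    b"digitalsourcetype/compositeWithTrainedAlgorithmicMedia",
)


def has_ai_metadata_marker(file_bytes: bytes) -> bool:
    """Return True if the raw bytes contain a known AI provenance marker.

    This is a naive substring scan: it only finds metadata stored as
    uncompressed text and does nothing about invisible watermarks.
    """
    return any(marker in file_bytes for marker in AI_SOURCE_MARKERS)


def suggest_label(file_bytes: bytes) -> Optional[str]:
    """Hypothetical helper: map a detected marker to a user-facing label."""
    return "AI-generated" if has_ai_metadata_marker(file_bytes) else None
```

A check like this only works while the metadata survives. Re-encoding an image or stripping its metadata removes the marker, which is why metadata alone cannot be the whole answer.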

His blog post does not specify how much work others have done in this regard. However, Clegg suggests that within the next 12 months Meta expects to be able to detect AI-generated imagery not only from its own tools but also from those made by Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock.

AI-generated Video and Audio

According to Clegg, detecting AI-generated audio and video is still too difficult in most cases. The reason is that marking and watermarking have yet to be adopted widely enough for detection tools to perform well. Such signals can also be stripped out through editing and further media manipulation.

He wrote that it is not yet possible to identify all AI-generated content and that there are ways for people to strip out invisible markers, so Meta is exploring several options. The company is putting a lot of effort into developing classifiers that can recognize AI-generated content automatically, even when it lacks invisible markers. At the same time, it is looking for ways to make invisible watermarks harder to remove or alter.

For instance, Meta’s AI research lab, FAIR, recently shared research on Stable Signature, an invisible watermarking technology it is developing. For some types of image generators, this builds the watermarking mechanism directly into the image-generation process, which could be valuable for open-source models because the watermarking then cannot be turned off.

Given the gap between what AI can generate and what detection technology can catch, Meta is changing its policy to require users to disclose when they post “photorealistic” AI-generated video or “realistic-sounding” audio. Clegg says Meta also reserves the right to label content itself if it determines there is a “particularly high risk of materially deceiving the public on a matter of importance.”

Users who fail to make this manual disclosure could be penalized under Meta’s existing Community Standards, for example with account suspensions or bans.

When asked what kind of penalties users who fail to disclose could face, a spokeswoman for Meta responded, “Our Community Standards apply to everyone, all over the world, and to all types of content, including AI-generated content.”

Although Meta highlights the dangers of AI-generated fakes, it is worth remembering that manipulating digital media is nothing new and that sophisticated generative AI tools are not necessary to deceive large numbers of people. A social media account and rudimentary media editing skills are all it takes to create a fake that goes viral.

On this point, a recent ruling by the Oversight Board, a content review body established by Meta, examined the company’s decision to leave up an edited video of President Biden with his granddaughter that had been manipulated to falsely imply improper touching. The Board urged the internet giant to rewrite what it called “incoherent” rules on faked videos, specifically criticizing Meta’s narrow focus on AI-generated content.

Oversight Board co-chair Michael McConnell wrote, “As it stands, the policy makes little sense.” He noted that the policy bans altered videos that show people saying things they did not say but does not prohibit posts that depict someone doing something they did not do. It also applies only to video created with AI, leaving other fake content exempt.

Asked whether Meta is considering extending its policies in light of the Board’s review, so that the risks of non-AI-related content manipulation are not ignored, the company’s spokesperson declined to answer, stating, “Our response to this decision will be shared on our transparency center within the 60-day window.”

LLMs as a content moderation tool

Clegg’s blog post also discusses Meta’s (so far “limited”) use of generative AI as a tool to help enforce its own policies. He raises the possibility that GenAI will pick up more of the slack here, indicating that the company may rely on large language models (LLMs) to help enforce its policies during moments of “heightened risk,” such as elections.

He noted that although Meta uses AI to help enforce its policies, its use of generative AI tools for this purpose has so far been limited. The company hopes, however, that generative AI will help it take down harmful content faster and more accurately, and that it might also help enforce its policies during high-risk events like elections. Meta has begun testing LLMs by training them on its Community Standards to see whether they can determine if a piece of content violates its policies. According to Clegg, these early tests suggest that LLMs can outperform existing machine learning models. Meta is also using LLMs to remove content from review queues in cases where it is highly confident the content does not violate its policies, which gives reviewers more time to concentrate on material that is more likely to break the rules.

In other words, Meta is now experimenting with generative AI as a supplement to its standard AI-powered content moderation, in an effort to reduce the amount of harmful content that overworked human reviewers are exposed to, with all the trauma risks that entails.

AI alone could not resolve Meta’s content moderation problem, and it is doubtful that AI plus GenAI will either. However, it could help the internet giant become more efficient at a time when its practice of outsourcing the moderation of harmful content to low-paid workers is facing legal challenges in several markets.

According to Clegg’s post, AI-generated content on Meta’s platforms is “eligible to be fact-checked by our independent fact-checking partners.” It could therefore also be labeled as debunked, rather than just as AI-generated or, as Meta’s current GenAI image labels put it, “Imagined by AI.” Frankly, this sounds increasingly confusing for users trying to judge the credibility of what they see on its social media platforms: a piece of content may have several labels attached to it, just one, or none at all.

Clegg also fails to address the chronic imbalance between fact-checkers and bad actors. Human fact-checking is typically provided by nonprofit organizations with limited time and resources to debunk a virtually endless supply of digital fakes. Against them stand all kinds of malicious actors with access to social media platforms, driven by a variety of incentives and funders, who can weaponize increasingly accessible and powerful AI tools to scale disinformation threats massively. Those tools include the ones Meta itself is developing and making available to support its content-dependent business.

Without reliable data on the ratio of synthetic to authentic content on Meta’s platforms, or on how effective its AI fake detection systems actually are, there is little we can conclude beyond the obvious: Meta is under considerable pressure to be seen to be doing something in a year when election-related fakes are drawing a great deal of attention.