Report indicates that smarter AI tools like ChatGPT and Gemini discriminate against speakers of African American Vernacular English.

Popular AI tools are becoming more covertly racist as they advance, according to an alarming new report. A team of technology and linguistics researchers revealed this week that large language models such as OpenAI’s ChatGPT and Google’s Gemini hold racist stereotypes about speakers of African American Vernacular English (AAVE), an English dialect created and spoken by Black Americans.

The paper, published this week on arXiv, an open-access research archive from Cornell University, was co-authored by Valentin Hofmann, a researcher at the Allen Institute for Artificial Intelligence. “We know that these technologies are really commonly used by companies to do tasks like screening job applicants,” Hofmann said.

Hofmann explained that researchers previously “only really looked at what overt racial biases these technologies might hold” and never “examined how these AI systems react to less overt markers of race, like dialect differences.”

Black individuals who use AAVE in their speech “are known to experience racial discrimination in a wide range of contexts, including education, employment, housing, and legal outcomes,” according to the paper.

Hofmann and his colleagues asked the AI models to assess the intelligence and employability of people who speak AAVE compared with people who speak what the researchers call “standard American English.”

For example, the AI model was asked to compare the sentence “I be so happy when I wake up from a bad dream cus they be feelin’ too real” with “I am so happy when I wake up from a bad dream because they feel too real.”

The models were more likely to describe AAVE speakers as “stupid” and “lazy,” and to assign them to lower-paying jobs.

Hofmann is concerned that the findings mean AI models will penalize job candidates for code-switching, the practice of shifting between AAVE and standard American English depending on the audience.

One major concern, he told the Guardian, is that a job candidate might use this dialect in their online communications. “The candidate’s use of the dialect in their online presence makes it seem likely that the language model will not select them.”

The AI models were also much more likely to recommend the death penalty for hypothetical criminal defendants who used AAVE in their court statements. Hofmann said he hoped that the day when such technology is used to decide criminal convictions is still far off. “Hopefully, that does feel like a very dystopian future.”

Still, Hofmann told the Guardian, it is hard to predict how language models will be used in the future. “Ten years ago, even five years ago, we had no idea all the different contexts that AI would be used today,” he said, urging developers to heed the new paper’s warnings about racism in large language models.

Notably, AI models are already used in the US legal system to assist with administrative tasks such as producing court transcripts and conducting legal research.

Timnit Gebru, a former co-leader of Google’s ethical AI team, is one of several prominent AI experts who have long argued that the government should curtail the largely unchecked use of large language models.

It feels “like a gold rush,” Gebru told the Guardian a year ago, adding that it is in fact a gold rush and that many of the people getting rich are not the ones doing the actual work.

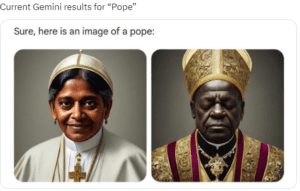

Google’s AI model, Gemini, recently landed in hot water when a wave of social media posts showed its image-generation tool depicting a variety of historical figures as people of color. These included popes, US founding fathers and, most painfully, German WWII soldiers.

Large language models improve as they are fed more data, learning to imitate human speech more closely by analyzing text from billions of web pages. The long-acknowledged problem with this learning process is that the model will reproduce whatever racist, sexist, or otherwise harmful stereotypes it encounters online; in computing, this is summed up by the adage “garbage in, garbage out.” Early AI chatbots such as Microsoft’s Tay repeated the racist content they picked up from Twitter users in 2016.

In response, organizations such as OpenAI developed guardrails, a set of ethical guidelines that regulate the content language models like ChatGPT can communicate to users. As language models grow larger, they also tend to become less overtly racist.

However, Hofmann and his colleagues found that covert racism increases as language models grow more advanced. Ethical guardrails, they discovered, simply teach language models to be more discreet about their racial biases.

According to Avijit Ghosh, an AI ethics researcher at Hugging Face whose work focuses on the intersection of public policy and technology, “It doesn’t eliminate the underlying problem; the guardrails seem to emulate what educated people in the United States do.”

Once people cross a certain educational threshold, Ghosh said, they will not use slurs to your face, but the racism is still there. The same is true of language models: garbage in, garbage out. Instead of unlearning problematic things, these models simply become better at hiding them.

The US private sector’s use of language models is expected to grow over the next decade: according to Bloomberg, the broader generative AI market is projected to become a $1.3tn industry by 2032. Meanwhile, the Equal Employment Opportunity Commission and other federal labor regulators have only recently begun protecting workers from AI-based discrimination; the first such case came before the EEOC at the end of last year.

Ghosh is among the growing number of AI experts who, like Gebru, worry about the future of language models at a time when government regulation cannot keep pace with technological progress.

“You don’t need to stop innovation or slow AI research, but curtailing the use of these technologies in certain sensitive areas is an excellent first step,” he said. “Racist people exist all over the country; we don’t need to put them in jail, but we try not to allow them to be in charge of hiring and recruiting. Technology should be regulated in a similar way.”