Artificial intelligence benchmarking group MLCommons released a new set of tests and results on Wednesday that rate how quickly top-of-the-line hardware can run AI applications and respond to users. The two new benchmarks added by MLCommons measure how quickly AI chips and systems can generate responses from powerful, data-rich AI models. The results indicate how quickly an AI application such as ChatGPT can respond to a user's query.

One of the new benchmarks measures how quickly systems handle question-and-answer scenarios for large language models. The model it uses, Llama 2, was developed by Meta Platforms and has 70 billion parameters.

MLCommons officials also added a text-to-image generator, based on Stability AI's Stable Diffusion XL model, to the suite of benchmarking tools, called MLPerf.

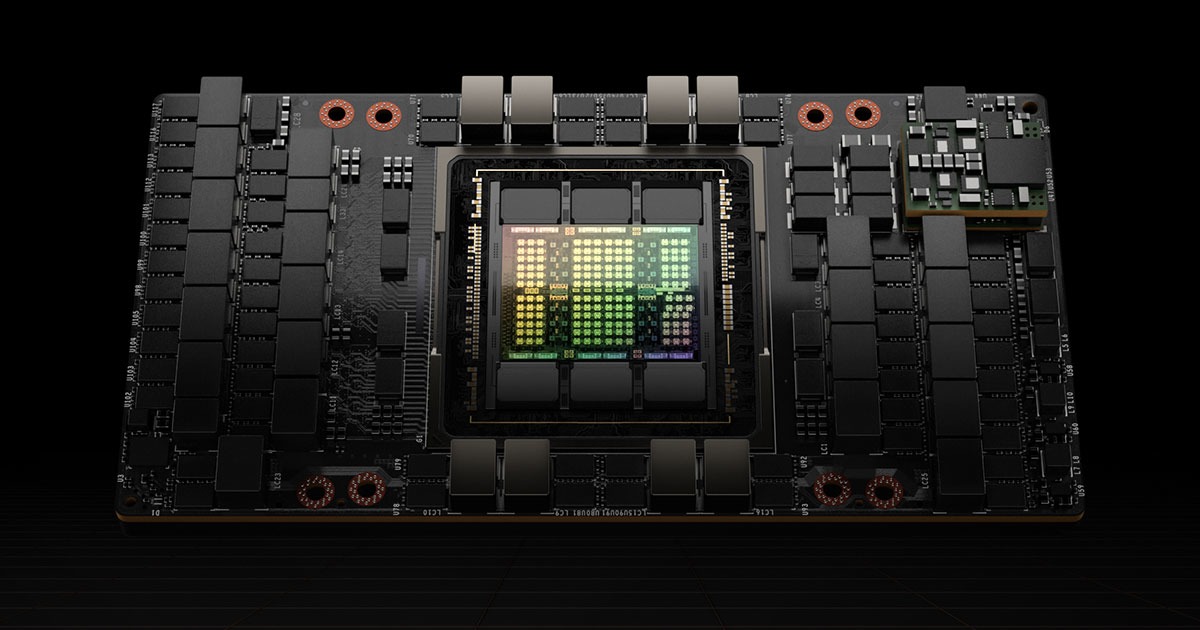

Servers powered by Nvidia's H100 chips, built by companies such as Google, Supermicro, and Nvidia itself, set new records for raw performance. Several server builders submitted designs based on Nvidia's less powerful L40S chip.

Server builder Krai submitted a design for the image generation benchmark that uses a Qualcomm AI chip drawing significantly less power than Nvidia's latest processors.

Intel also submitted a design based on its Gaudi2 chips. The company described the results as “strong.”

When deploying AI applications, raw performance is not the only factor that matters. Advanced AI chips consume large amounts of energy, and one of the biggest challenges for AI companies is making chips that deliver as much performance as possible while using as little energy as possible.

MLCommons has a separate benchmark category that measures power consumption.