Today, Nvidia is introducing Chat with RTX in early access. It is a demo app that lets you run a personal AI chatbot on your PC. You can feed it YouTube videos and your own documents to generate summaries and get relevant answers based on your data. It all runs locally, and all you need is an RTX 30- or 40-series GPU with at least 8GB of VRAM.

I have been using Chat with RTX for a short while. Even though it is still at an early stage of development, I can already see it being a valuable tool for journalists and other researchers who need to analyze a large collection of documents.

Chat with RTX can handle YouTube videos: enter a video URL, and it can search the transcript for specific mentions or summarize the entire video. I found it excellent for searching video podcasts, especially for finding specific mentions in the past week’s episodes amid rumors that Microsoft is changing its Xbox strategy.

However, it was not perfect for searching YouTube videos. When I tried to search the transcript of a Verge YouTube video, Chat with RTX retrieved the transcript for an entirely different video. It was not even a video I had queried earlier, so this early demo clearly has rough edges.

When it was working properly, it took me only a few seconds to locate references in videos. I also assembled a collection of FTC v. Microsoft documents for Chat with RTX to analyze. When I was covering the court case last year, it was often overwhelming to search through documents quickly, but Chat with RTX let me query them almost instantly on my PC.

For instance, the chatbot did an excellent job of summarizing Microsoft’s entire Xbox Game Pass strategy from internal documents presented during the trial:

Xbox Game Pass is a gaming content subscription service that offers access to a library of games for a single monthly charge, according to the context information that has been provided. It is intended to be a platform that allows gamers to play their games wherever they want. It gives publishers access to players worldwide. With the help of Xbox Series X and Project xCloud, the service attempts to establish a favorable environment for game publishers to profit from their creations. Scaling the Xbox Game Pass is the principal strategic goal of the Gaming CSA. There is a worldwide subscriber possibility with 750 million potential users.

The service must deal with three central content dynamics:

- the need for unique content

- going beyond the console

- restricted content availability

It has also been helpful to me for going through PDFs and fact-checking data. Microsoft’s own Copilot system does not handle PDFs well within Word, but Nvidia’s Chat with RTX had no trouble extracting all the essential data. The responses are also almost instantaneous, without the lag you usually see with cloud-based chatbots such as ChatGPT or Copilot.

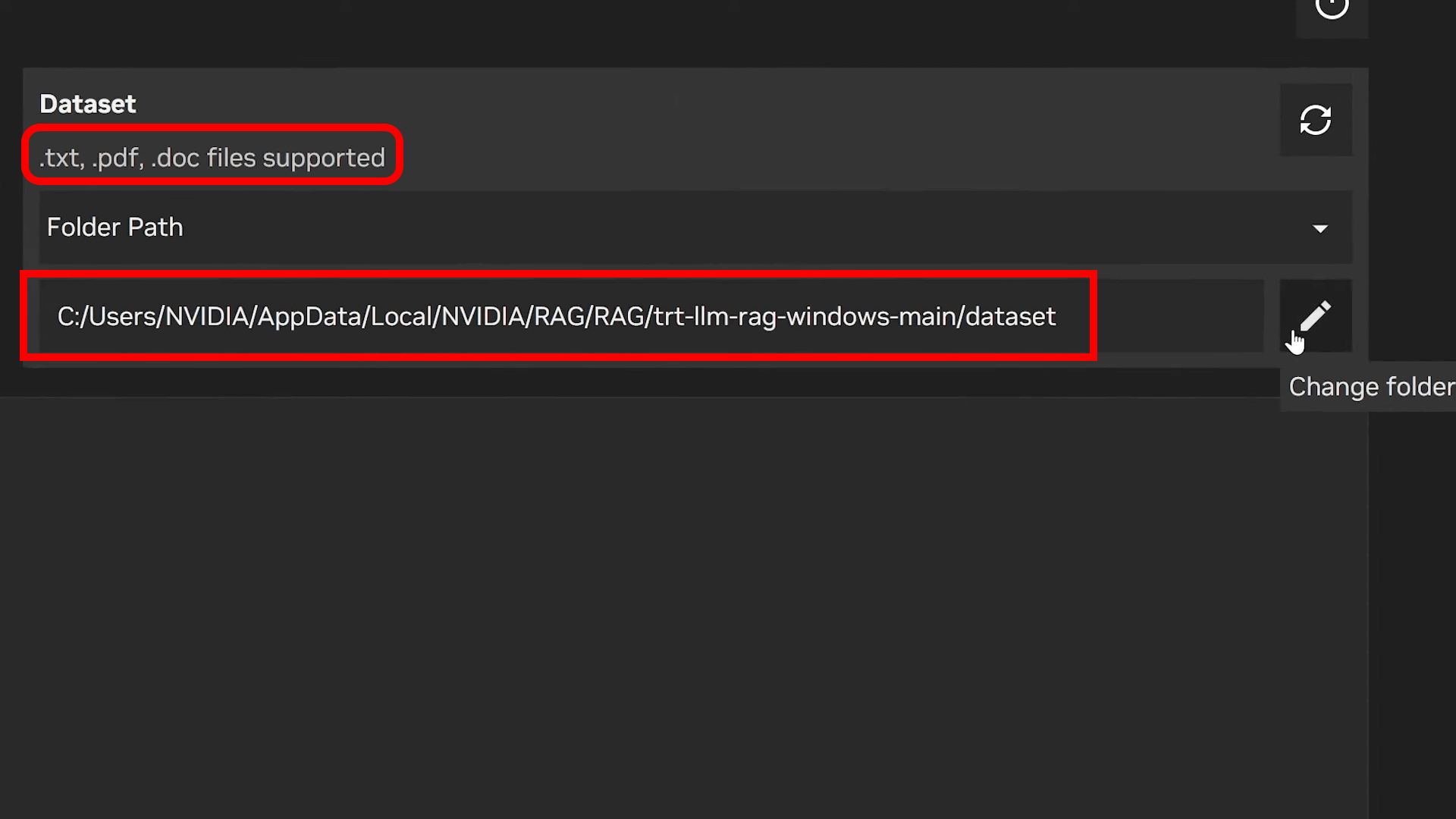

Chat with RTX’s main drawback is that it feels like an early developer demo. It installs a Python instance and a web server on your computer, which then use Mistral or Llama 2 models to query the data you provide. It then uses the Tensor cores on an Nvidia RTX GPU to speed up your queries.
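For a rough sense of what "querying the data you provide" can involve, here is a minimal Python sketch of one common approach: split local text into chunks, score each chunk against a question, and pass only the best matches to a language model. This is an illustration under stated assumptions, not Nvidia's implementation. The bag-of-words scoring, the chunk size, and the helper names `chunk_text` and `top_chunks` are all hypothetical, and a real app would more likely rely on learned embeddings and a GPU-accelerated model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk_text(text, max_words=50):
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_chunks(question, chunks, k=2):
    """Return up to k chunks that share words with the question, best first."""
    q_vec = Counter(tokenize(question))
    scored = [(cosine_similarity(q_vec, Counter(tokenize(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop chunks with no word overlap at all (hypothetical cutoff).
    return [chunk for score, chunk in scored[:k] if score > 0]
```

Calling `top_chunks("What is Game Pass?", chunks, k=1)` on a handful of chunks returns the one with the most word overlap. In a full pipeline, that text would be placed in the model's prompt so the answer is grounded in your files.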

My PC has an RTX 4090 GPU and an Intel Core i9-14900K processor, and it took me around thirty minutes to install Chat with RTX on it. The application is about 40GB in size, and the Python instance uses about 3GB of the 64GB of RAM on my PC. Once it is running, you access Chat with RTX from a browser, while a command prompt in the background displays the data being processed and any error codes.

Nvidia is not presenting this as a polished app that every RTX owner should download and install right away. Among the acknowledged issues and limitations is that source attribution is not always accurate. I initially tried to get Chat with RTX to index 25,000 documents, but that crashed the application, and I had to delete its saved settings to get it running again.

Chat with RTX also does not remember context, so follow-up questions cannot build on the details of a previous question. It creates JSON files inside the folders it indexes, too, so I would not advise pointing it at your entire Windows Documents folder.
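One way to keep stray index files out of your real folders is to index a disposable copy instead. The Python sketch below copies matching files into a scratch directory that you could then select in the app. The `stage_for_indexing` name, the paths you pass in, and the default extension list are assumptions for illustration, not something Chat with RTX provides or requires.

```python
import shutil
from pathlib import Path


def stage_for_indexing(source_dir, scratch_dir, extensions=(".pdf", ".txt", ".docx")):
    """Copy matching files from source_dir into scratch_dir, keeping subfolders.

    Returns the list of copied destination paths. The originals are untouched,
    so any files an indexer writes end up only in the scratch copy.
    """
    source = Path(source_dir).resolve()
    scratch = Path(scratch_dir).resolve()
    # Refuse a scratch folder inside the source, which would copy into itself.
    if scratch == source or source in scratch.parents:
        raise ValueError("scratch_dir must be outside source_dir")

    copied = []
    for path in sorted(source.rglob("*")):
        # Hypothetical filter: only file types we assume are worth indexing.
        if path.is_file() and path.suffix.lower() in extensions:
            target = scratch / path.relative_to(source)
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(path, target)
            copied.append(target)
    return copied
```

When you are done, deleting the scratch folder removes both the copies and whatever the indexer left behind.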

Still, I love a good tech demo, and Nvidia has delivered one. It shows the promise of what an AI chatbot can do locally on your PC, particularly if you want to analyze your own files without subscribing to something like Copilot Pro or ChatGPT Plus.