Stanford researchers found thousands of links to CSAM in LAION-5B, a dataset used to train Stable Diffusion. According to Stanford’s Internet Observatory, the widely used dataset for AI image generation included references to images of child abuse, which could enable AI models to generate harmful content. The LAION-5B dataset, used by Stability AI, the developer of Stable Diffusion, contained at least 1,679 explicit images scraped from popular adult websites and social media posts.

In September 2023, the researchers started searching the LAION dataset to determine how much child sexual abuse material (CSAM), if any, it contained. They looked through hashes, or image identifiers, and sent them to CSAM detection platforms such as PhotoDNA. The matches were then verified by the Canadian Centre for Child Protection.
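The hash lookup described above can be illustrated with a minimal sketch. It assumes exact MD5 matching against a blocklist of known hashes, which is a simplification: PhotoDNA is a perceptual hashing service whose interface is not shown here, and the record fields (`url`, `md5`), the function names, and the placeholder data are all hypothetical rather than taken from the Stanford report.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Hex MD5 digest of raw bytes (an exact-match fingerprint, not a perceptual hash)."""
    return hashlib.md5(data).hexdigest()


def flag_known_hashes(records, known_hashes):
    """Return the records whose 'md5' field appears in the set of known hashes."""
    known = {h.lower() for h in known_hashes}
    return [r for r in records if (r.get("md5") or "").lower() in known]


# Harmless placeholder byte strings stand in for image files (assumption).
records = [
    {"url": "https://example.com/a.jpg", "md5": md5_hex(b"placeholder-a")},
    {"url": "https://example.com/b.jpg", "md5": md5_hex(b"placeholder-b")},
]
# Hypothetical blocklist, as might be supplied by a child-protection organization.
blocklist = {md5_hex(b"placeholder-b").upper()}

flagged = flag_known_hashes(records, blocklist)
print([r["url"] for r in flagged])
```

Exact hashes only match byte-identical files, so a resized or re-encoded copy would slip through. That limitation is one reason detection platforms rely on perceptual hashing instead.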

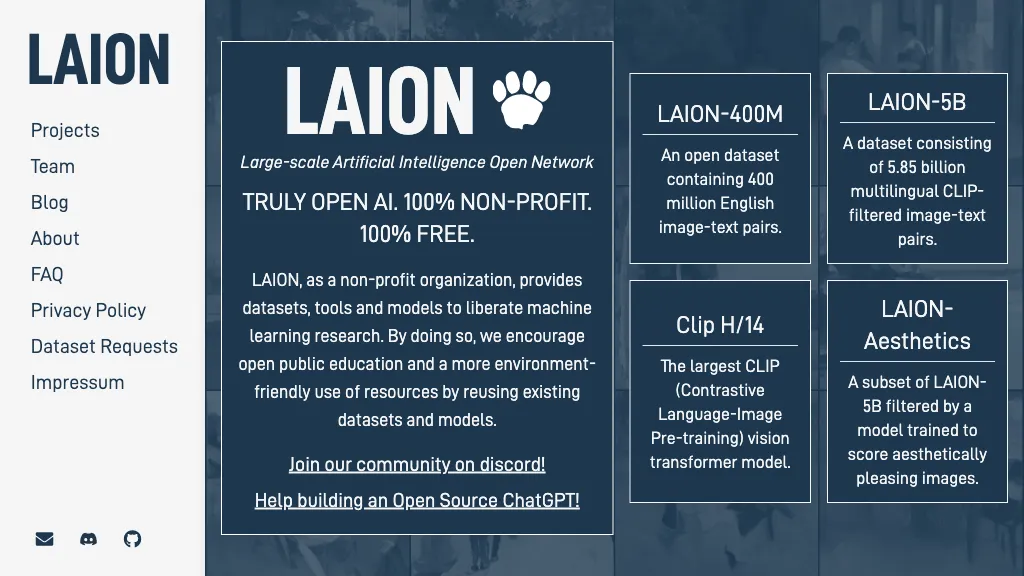

According to the LAION website, the dataset does not store the images themselves. Instead, it indexes the web and holds links to images along with the alt text scraped with them. The first version of Imagen, Google’s text-to-image AI tool, which was released only for research purposes, was trained on a different LAION dataset called LAION-400M, an older version of 5B. According to the company, subsequent versions did not use LAION datasets. The Stanford report noted that Imagen’s developers found that 400M included “a wide range of inappropriate content, including pornographic imagery, racist slurs, and harmful social stereotypes.”

LAION, the nonprofit that manages the dataset, told Bloomberg that it has a “zero-tolerance” policy for harmful content and that it would temporarily take the datasets offline. Stability AI told the publication that it has policies in place to prevent misuse of its platform. The company said that although it trained its models on LAION-5B, it focused on a portion of the dataset and fine-tuned it for safety.

According to the Stanford researchers, the presence of CSAM does not necessarily affect the output of models trained on the dataset. Still, there is a chance the model learned something from the images.

“The presence of repeated identical instances of CSAM is also problematic, particularly due to its reinforcement of images of specific victims,” the authors of the paper stated.

The researchers acknowledged that fully removing the problematic content would be difficult, particularly from AI models already trained on it. They advised that models based on LAION-5B, such as Stable Diffusion 1.5, “should be deprecated and distribution ceased where feasible.” Google has not disclosed which dataset it used to train its latest version of Imagen, other than to say it did not use LAION.

Several US attorneys general have urged lawmakers to prohibit the creation of AI-generated child sexual abuse material (CSAM) and to establish a committee to study the effects of AI on child exploitation.