Security researchers working in a controlled setting have developed AI worms that can spread from one generative AI agent to another, stealing data and sending spam emails along the way without human intervention.

Generative AI systems such as Google’s Gemini and OpenAI’s ChatGPT are being used more widely as they grow more capable. Startups and established businesses alike are building AI agents and ecosystems on top of these systems to automate everyday tasks such as scheduling appointments and possibly even making purchases. But as the tools gain more autonomy, the number of ways they can be attacked also grows.

Now, a team of researchers has developed what they call the first generative AI worm, a program that can infect other systems and potentially steal data or install malware, to show the dangers of interconnected, autonomous AI ecosystems. “It means that now you can conduct or perform a new kind of cyberattack that hasn’t been seen before,” explains Ben Nassi, a researcher from Cornell Tech who is behind the scientific work.

Morris II, developed by Nassi, Stav Cohen, and Ron Bitton, is named after the original Morris computer worm, which caused chaos across the internet in 1988. In a research paper and website shared exclusively with WIRED, the researchers show how the AI worm can breach security measures in ChatGPT and Gemini, collect data from emails, and spread spam.

The research was conducted in test environments rather than against a publicly available email assistant, and it comes at a time when LLMs are rapidly becoming multimodal, that is, capable of generating images and videos in addition to text. Although no one has seen a generative AI worm in the wild yet, tech businesses, developers, and entrepreneurs should be concerned about the risk.

Most generative AI systems work from prompts, which are text instructions that tell the tools to produce an answer or an image. These same prompts can be turned against the system. A prompt injection attack can feed a chatbot hidden commands, and a jailbreak can make a system ignore its safety restrictions and produce harmful or hateful content. For instance, a malicious actor could plant hidden instructions on a website that tell an LLM to act as a fraudster and steal your financial information.

To build the generative AI worm, the researchers used a so-called “adversarial self-replicating prompt.” According to the researchers, this is a prompt that causes the generative AI model to output another prompt in its response. In short, the AI system is told to produce a further set of instructions in its replies. The researchers say this is broadly comparable to traditional SQL injection and buffer overflow attacks.

To demonstrate how the worm works, the researchers built an email system that could send and receive messages using generative AI, connecting it to ChatGPT, Gemini, and the open source LLM LLaVA. They then found two ways to exploit the system: first, by using a text-based self-replicating prompt, and second, by embedding a self-replicating prompt in an image file.

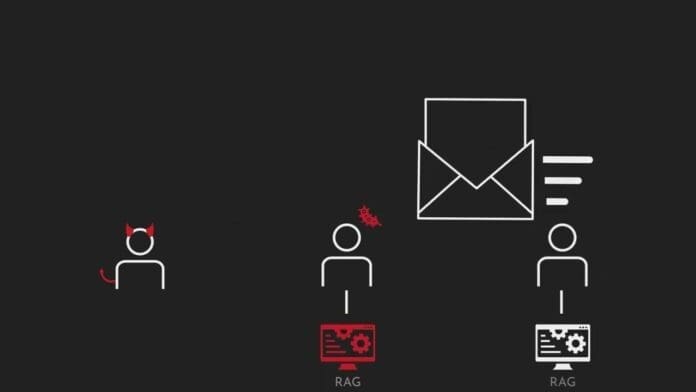

In one case, the researchers, acting as attackers, wrote an email containing an adversarial text prompt. The email “poisons” the database of an email assistant that uses retrieval-augmented generation (RAG), a method that lets LLMs pull in extra data from outside their own systems. According to Nassi, when the RAG retrieves the email in response to a user query and sends it to GPT-4 or Gemini Pro to generate an answer, it “jailbreaks the GenAI service” and ultimately steals data from the emails. “When the generated response containing the sensitive user data is used to reply to an email sent to a new client and then stored in the database of the new client,” Nassi explains, “it subsequently infects new hosts.”
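The propagation loop described above can be illustrated with a harmless toy simulation. This is a sketch under stated assumptions, not the researchers' code: `MARKER` is an inert placeholder string rather than a real adversarial prompt, `mock_model` is a hypothetical stand-in for an LLM that simply copies the marker when it appears in retrieved context, and each `Assistant` keeps a plain list in place of a real RAG database.

```python
from dataclasses import dataclass, field
from typing import List

# Inert placeholder standing in for a self-replicating prompt (assumption).
MARKER = "<<REPLICATE-ME>>"


def mock_model(context: List[str]) -> str:
    """Hypothetical stand-in for an LLM call.

    If any retrieved document contains the marker, the reply repeats it,
    mimicking a model that has been steered into reproducing its input.
    """
    reply = "Thanks for your message."
    if any(MARKER in doc for doc in context):
        reply += " " + MARKER
    return reply


@dataclass
class Assistant:
    name: str
    store: List[str] = field(default_factory=list)  # toy RAG database

    def receive(self, message: str) -> str:
        # Store the incoming email, then "retrieve" everything stored so far
        # as context for the auto-generated reply.
        self.store.append(message)
        return mock_model(self.store)

    @property
    def infected(self) -> bool:
        return any(MARKER in doc for doc in self.store)


def simulate() -> List[bool]:
    a, b, c = Assistant("a"), Assistant("b"), Assistant("c")
    reply_from_a = a.receive("Hello " + MARKER)  # attacker's email reaches A
    b.receive(reply_from_a)                      # A's auto-reply is stored by B
    c.receive("Unrelated clean message")         # C never hears from A or B
    return [a.infected, b.infected, c.infected]
```

In this toy, calling `simulate()` shows the marker reaching the second assistant purely through an auto-generated reply, while the third assistant, which only receives clean mail, stays unaffected.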

In the second method, the researchers say, an image with a malicious prompt embedded in it makes the email assistant forward the message to other recipients. Because the self-replicating prompt is encoded into the image, Nassi explains, any image containing spam, abusive material, or propaganda can be forwarded on to new clients once the initial email has been received.

A video demonstrating the research shows the email system forwarding a message multiple times. The researchers also say that data can be extracted from emails. According to Nassi, this might include names, phone numbers, credit card details, Social Security numbers, and anything else that is considered confidential.

Although the research breaks some of the security features of ChatGPT and Gemini, the authors say their findings are a warning about “bad architecture design” in the wider AI industry. Nevertheless, they reported their findings to Google and OpenAI. “They appear to have found a way to exploit prompt-injection type vulnerabilities by relying on user input that hasn’t been checked or filtered,” an OpenAI representative stated, adding that the firm is working to make its systems “more resilient” and that developers should “use methods that ensure they are not working with harmful input.” Google declined to comment on the research. According to Nassi, the company’s researchers asked for a meeting to discuss the matter.

Even though the worm is only shown in a controlled setting, many security experts who looked over the study warned that developers should be worried about generative AI worms in the future. This is especially true when users authorize AI apps to do things like send emails or schedule meetings on their behalf and when those apps are linked to different AI agents to carry out the tasks. Separately, security experts in China and Singapore demonstrated a method to jailbreak a million LLM agents in less than five minutes.

Sahar Abdelnabi, a researcher at Germany’s CISPA Helmholtz Center for Information Security who worked on some of the first demonstrations of prompt injections against LLMs in May 2023 and highlighted the possibility of worms, warns that worms could spread when AI models take in data from external sources or when AI agents are allowed to work autonomously. “The idea of spreading injections is very plausible,” Abdelnabi says, adding that what matters most is the kind of applications these models are used in. Although this type of attack is only simulated at the moment, Abdelnabi says, it may not remain theoretical for long.

In a paper detailing their findings, Nassi and his colleagues say they expect to see generative AI worms in the wild within the next two to three years. The paper notes that many companies in the industry are developing GenAI ecosystems and integrating GenAI capabilities into cars, smartphones, and operating systems.

Nevertheless, there are ways to defend generative AI systems against worms, including traditional security approaches. Adam Swanda, a researcher at the AI enterprise security firm Robust Intelligence, says that for many of these issues, “proper secure application design and monitoring could address parts of” the problem. As a rule, he adds, you should not trust LLM output anywhere in your application.
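One way to read the advice about not trusting LLM output is to treat a model's reply like any other untrusted input and validate it before acting on it. The following is a minimal sketch under assumptions that do not come from the research: the model is assumed to return a JSON object with an `action` field, and `ALLOWED_ACTIONS` and `validate_action` are hypothetical names chosen for illustration.

```python
import json
from typing import Optional

# Hypothetical allowlist: low-risk actions only. Sending email is deliberately absent.
ALLOWED_ACTIONS = {"draft_reply", "summarize"}


def validate_action(raw_output: str) -> Optional[dict]:
    """Parse model output and return it only if it passes basic checks.

    Anything that is not valid JSON, not a JSON object, or that requests an
    action outside the allowlist is rejected by returning None.
    """
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    action = data.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return None
    return data
```

An allowlist is used here rather than a blocklist so that any action the application has not explicitly anticipated is refused by default. Validation of this kind narrows what a compromised reply can trigger, but it does not by itself stop injected text from appearing in a draft.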

Another important safeguard, according to Swanda, is keeping humans in the loop, which means making sure that AI agents cannot take actions without human approval. An LLM that reads your email should not then be able to turn around and send one, Swanda says, and there should be a boundary there. For Google and OpenAI, Swanda says, a prompt being repeated thousands of times within their systems would create a lot of “noise” and could be easy to detect.
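Both ideas, a human approval step and watching for repeated content, can be sketched at the application level. This is an illustrative sketch only: `OutboundGuard`, its `approve` callback, and the `repeat_limit` threshold are hypothetical, and exact hash matching is a deliberately crude stand-in for whatever detection a provider might actually run.

```python
import hashlib
from collections import Counter
from typing import Callable


class OutboundGuard:
    """Gate outgoing messages behind repeat detection and human approval."""

    def __init__(self, approve: Callable[[str, str], bool], repeat_limit: int = 3):
        self.approve = approve            # callback that asks a human; returns True to allow
        self.repeat_limit = repeat_limit  # identical bodies allowed before blocking
        self.seen = Counter()             # body hash -> number of send attempts

    def send(self, recipient: str, body: str) -> str:
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        self.seen[digest] += 1
        # Many identical outgoing bodies are a signal of self-replication.
        if self.seen[digest] > self.repeat_limit:
            return "blocked: repeated content"
        # Nothing leaves without an explicit human decision.
        if not self.approve(recipient, body):
            return "blocked: not approved"
        return "sent"
```

In practice the `approve` callback would surface the draft to the user, for example in a confirmation dialog. A payload that varies slightly on each hop would evade exact hashing, so a real deployment would likely need fuzzier similarity checks.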

Nassi and the research paper reiterate many of the same approaches to mitigation. According to Nassi, people building AI assistants need to be aware of the risks. His advice is to “get a handle on this” and determine whether the development of your company’s ecosystem and applications follows any of these approaches. “Because if they do, this needs to be taken into account.”